AI Filmmaking: Tools, Workflows, and Production Guide (2025)

Last updated: November 11, 2025

AI filmmaking applies generative and assistive models across the complete production pipeline, from planning and shot generation through editorial assembly and final delivery. For suitable content types, the technology lets creators run production quality workflows without traditional crew, equipment, or location requirements.

This guide covers repeatable workflows, tool selection criteria, realistic cost and time benchmarks, and integration strategies for AI powered production. All information reflects current capabilities as of November 11, 2025.

What AI Filmmaking Means in Practice

AI filmmaking uses machine learning models to generate, enhance, or automate specific production tasks. The workflow typically includes text-to-video generation for primary coverage, image-to-video for inserts and effects, voice synthesis for dialogue and narration, sound effect generation, and AI assisted editing.

The technology does not replace traditional filmmaking. Instead, it provides alternative production methods suited to specific content types, budgets, and timelines. Narrative shorts, educational content, marketing videos, and concept visualization represent current practical applications.

Recent video generation models can produce cinematic footage, and some, such as Veo 3, generate synchronized audio alongside the picture. Models like Google Veo 3 (released May 2025) and Veo 3.1 (released October 15, 2025), Kling AI v2.1, and Luma's Dream Machine produce 5-10 second clips with photorealistic quality, natural motion, and controllable camera movements.

The distinguishing factor in AI filmmaking is not any single tool but rather the complete workflow from concept through delivery. Generating individual clips represents only one stage. The complete pipeline requires planning for consistency, managing iterative generation, handling multiple modalities, and assembling final output in a standard editing environment.

End to End Production Workflow

A repeatable AI filmmaking workflow follows six distinct stages. Each stage has specific inputs, outputs, and quality checkpoints that determine whether to proceed or iterate.

Stage 0: Scene Planning and Continuity

Before generating any content, establish a single source of truth for all scene elements. This documentation ensures consistency across generated shots and enables efficient iteration when regenerating specific elements.

Create a scene breakdown that includes character descriptions with specific physical details, wardrobe and props that must remain consistent, environmental settings with lighting conditions, narrative beats with emotional tone, and a shot list with the coverage types needed.

Use a consistent prompt schema across all generation tasks. The format should specify:

Subject: [character description with specific details]

Action: [what happens in the shot]

Framing: [shot size and camera position]

Camera movement: [dolly, pan, tilt, static, etc.]

Lens: [focal length or visual characteristics]

Style: [lighting approach, mood, color palette]

Continuity: [elements that must match previous shots]

Audio: [dialogue, sound effects, ambience]

Duration: [target length in seconds]

Example prompt:

Subject: woman in gray coat and red scarf

Action: walks toward camera, stops, looks up at building

Framing: medium shot transitioning to close-up

Camera movement: dolly in slowly

Lens: 50mm equivalent, shallow depth of field

Style: golden hour lighting, warm tones, cinematic grain

Continuity: same coat and scarf from shot 003

Audio: footsteps on pavement, distant traffic ambience

Duration: 8 seconds
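
The schema is easier to apply consistently when each shot is stored as structured data and rendered to text on demand. The following is a minimal sketch of that idea, not a format any particular model requires; the class name `ShotPrompt`, its field names, and the shot number are illustrative assumptions.

```python
from dataclasses import dataclass, fields


@dataclass
class ShotPrompt:
    """One shot described with the nine schema fields (names are illustrative)."""
    subject: str
    action: str
    framing: str
    camera_movement: str
    lens: str
    style: str
    continuity: str
    audio: str
    duration_seconds: int

    def render(self) -> str:
        """Return the prompt as labeled lines in schema order."""
        lines = []
        for f in fields(self):
            label = f.name.replace("_", " ").capitalize()
            value = getattr(self, f.name)
            if f.name == "duration_seconds":
                label, value = "Duration", f"{value} seconds"
            lines.append(f"{label}: {value}")
        return "\n".join(lines)


# The example prompt from this section, stored as data (shot number is hypothetical).
shot_004 = ShotPrompt(
    subject="woman in gray coat and red scarf",
    action="walks toward camera, stops, looks up at building",
    framing="medium shot transitioning to close-up",
    camera_movement="dolly in slowly",
    lens="50mm equivalent, shallow depth of field",
    style="golden hour lighting, warm tones, cinematic grain",
    continuity="same coat and scarf from shot 003",
    audio="footsteps on pavement, distant traffic ambience",
    duration_seconds=8,
)
print(shot_004.render())
```

Keeping prompts as data means a continuity change, such as a new wardrobe detail, can be made in one place and re-rendered for every affected shot.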

Maintain a look bible that documents visual style decisions, color palettes, reference images for mood, and technical specifications for output format.

Stage 1: Core Shot Generation

Generate primary coverage using text-to-video or image-to-video models. Start with 5-10 second clips optimized for the specific narrative beat. Longer generations increase failure rates and complicate iteration.

For dialogue scenes, generate coverage including wide establishing shots, medium two shots showing character interaction, over the shoulder angles for conversation, and closeups for emotional beats.

Action sequences require spatial continuity across shots. Generate establishing geography shots that show spatial relationships, followed by medium shots of action beats, then closeups for impact moments.

Plan for 3-6 generation attempts per shot before locking the final version. Variables affecting generation success include prompt clarity, model training biases, motion complexity, and consistency requirements.

Document each generation attempt with the exact prompt used, model version and settings, generation timestamp, specific issues observed, and decisions about iteration.
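
One way to keep this record is a flat log with one row per attempt. The sketch below assumes a CSV layout; the record type `GenerationAttempt`, its column names, and the model identifier are hypothetical placeholders rather than any platform's export format.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import datetime, timezone


@dataclass
class GenerationAttempt:
    """One generation attempt; fields mirror the items listed above."""
    shot_id: str
    attempt: int
    prompt: str
    model_version: str
    settings: str
    timestamp: str
    issues: str
    decision: str


def log_to_csv(attempts):
    """Serialize attempts to CSV text, one row per attempt."""
    buffer = io.StringIO()
    columns = [f.name for f in fields(GenerationAttempt)]
    writer = csv.DictWriter(buffer, fieldnames=columns)
    writer.writeheader()
    for attempt in attempts:
        writer.writerow(asdict(attempt))
    return buffer.getvalue()


attempts = [
    GenerationAttempt(
        shot_id="004",
        attempt=1,
        prompt="woman in gray coat walks toward camera",
        model_version="example-model-v1",  # placeholder, not a real identifier
        settings="seed=42",
        timestamp=datetime(2025, 11, 11, 9, 30, tzinfo=timezone.utc).isoformat(),
        issues="scarf color drifts to orange",
        decision="regenerate with stronger continuity note",
    ),
]
csv_text = log_to_csv(attempts)
print(csv_text)
```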

Current generation models work best for moderate paced camera movement, natural human motion and gestures, outdoor and interior environments with clear lighting, and emotional character moments.

Expect challenges with rapid camera movements, complex multiperson choreography, precise object interactions, fast cutting action sequences, and maintaining specific character features across multiple shots.

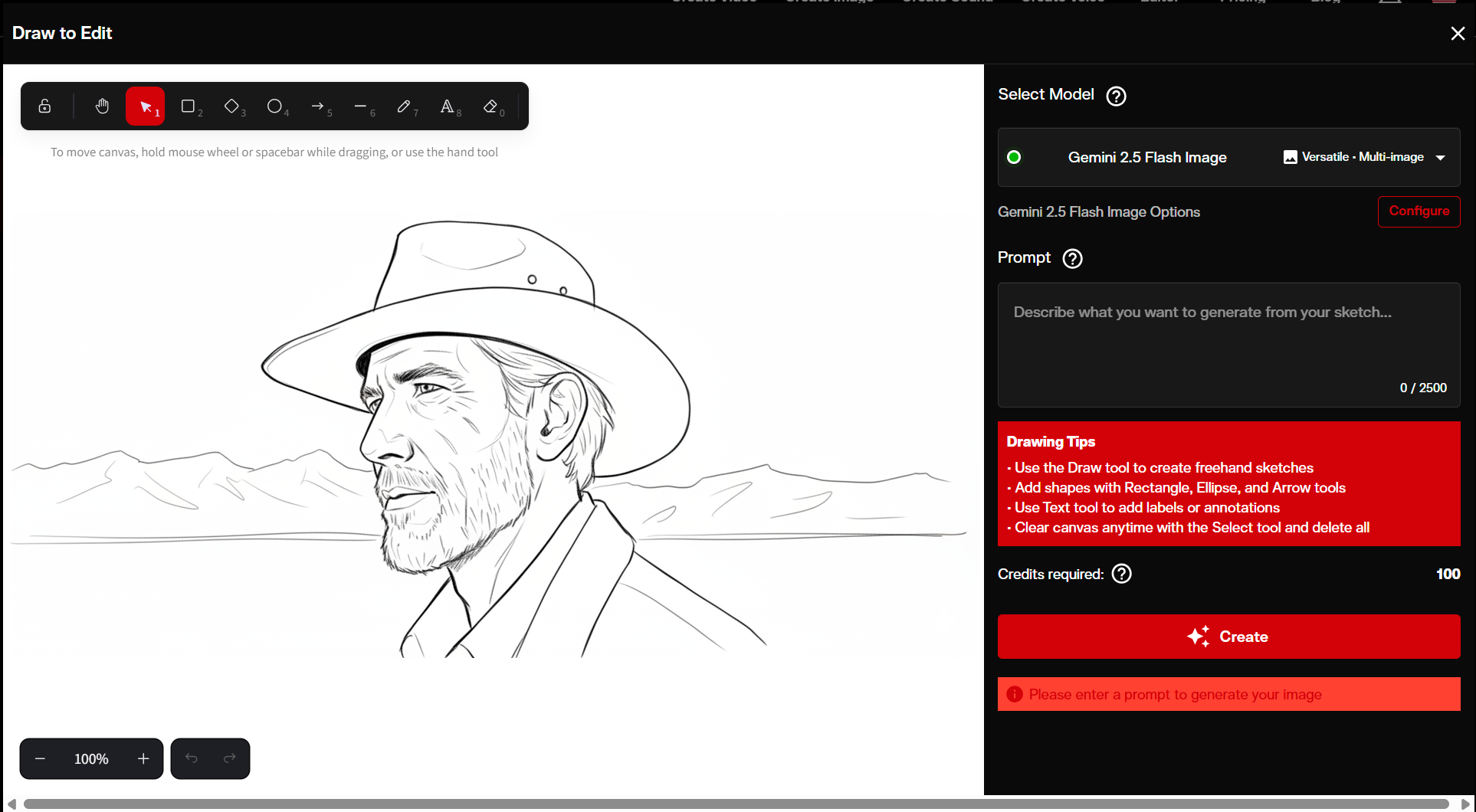

Stage 2: Image Generation for Plates and Inserts

When video generation fails to produce required shots, generate still images and apply motion techniques. This hybrid approach maintains visual quality while working around generation limitations.

Use image generation for environment plates showing locations without characters, prop closeups and detail inserts, graphic elements and title cards, matte paintings for backgrounds, and reference sheets for character consistency.

Apply 2.5D parallax motion to stills using depth estimation and camera projection. This technique creates subtle movement suitable for establishing shots and transitions.

For character moments requiring specific expressions or poses, generate image sequences that show progression from one state to another. Feed these sequences into image-to-video models to create smooth motion between key poses.

Stage 3: Voice Synthesis and Audio Design

Generate voice content for narration, dialogue, and temporary scratch tracks. Voice synthesis enables rapid iteration on script pacing and emotional tone before committing to final delivery.

Select voice models based on character age and gender, emotional range required, accent and speech patterns, and delivery style from conversational to dramatic.

Generate dialogue in short segments rather than complete takes. This segmented approach enables precise control over pacing, emotion, and breath placement.

Layer sound effects to establish spatial presence and environmental realism. Generate ambient soundscapes for location presence, specific action effects synchronized to visual events, and background activity.

Keep text transcripts of all dialogue for accessibility, future re-dubs, and localization.

Stage 4: Upscaling and Technical Conforming

Apply video super resolution selectively to final locked shots only. Upscaling temporary content wastes processing time.

Choose upscaling factors based on delivery requirements and original generation resolution. Current AI video models typically output at 720p or 1080p. Upscale 2x for HD delivery or 4x for 4K when original resolution supports it.
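
The choice of factor reduces to simple arithmetic: divide the delivery height by the source height, then pick the smallest available preset that covers the result. The sketch below assumes an upscaler that offers 2x and 4x presets measured per dimension; the preset list and function names are illustrative.

```python
PRESET_FACTORS = (2, 4)  # assumed upscaler presets, measured per dimension


def required_factor(source_height: int, target_height: int) -> float:
    """Linear scale needed to reach the target height from the source height."""
    return target_height / source_height


def pick_preset(source_height: int, target_height: int, presets=PRESET_FACTORS):
    """Return the smallest preset covering the target, 1 if none is needed,
    or None if no preset is large enough."""
    needed = required_factor(source_height, target_height)
    if needed <= 1:
        return 1
    for preset in sorted(presets):
        if preset >= needed:
            return preset
    return None


print(pick_preset(720, 1080))   # 720p source, 1080p delivery
print(pick_preset(720, 2160))   # 720p source, 4K UHD delivery
print(pick_preset(1080, 2160))  # 1080p source, 4K UHD delivery
```

When the chosen preset overshoots the delivery size, as with a 720p source upscaled 2x for 1080p delivery, the result is scaled down to the delivery resolution during conforming.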

Conform all clips to consistent frame rate and aspect ratio before assembly. Color grade consistently across all shots before beginning editorial assembly.
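
Conforming can be scripted outside the editor. The sketch below builds an FFmpeg command that scales a clip to fit the target frame, pads any remainder with bars, and resamples to a fixed frame rate. It assumes FFmpeg is installed; the 1920x1080 at 24 fps target and the file names are illustrative choices, not requirements from this guide.

```python
def conform_command(src: str, dst: str, width: int = 1920, height: int = 1080, fps: int = 24):
    """Build an ffmpeg argument list that conforms one clip.

    scale: fit inside the target frame while preserving aspect ratio
    pad:   center the result on a width x height canvas
    fps:   resample to the target frame rate
    """
    video_filter = (
        f"scale={width}:{height}:force_original_aspect_ratio=decrease,"
        f"pad={width}:{height}:(ow-iw)/2:(oh-ih)/2,"
        f"fps={fps}"
    )
    # Audio is copied unchanged; only the video stream is re-encoded.
    return ["ffmpeg", "-i", src, "-vf", video_filter, "-c:a", "copy", dst]


cmd = conform_command("shot_004.mp4", "shot_004_conformed.mp4")
print(" ".join(cmd))
```

To run the command, pass the list to `subprocess.run(cmd, check=True)`. Building the arguments as a list avoids shell quoting problems with the filter string.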

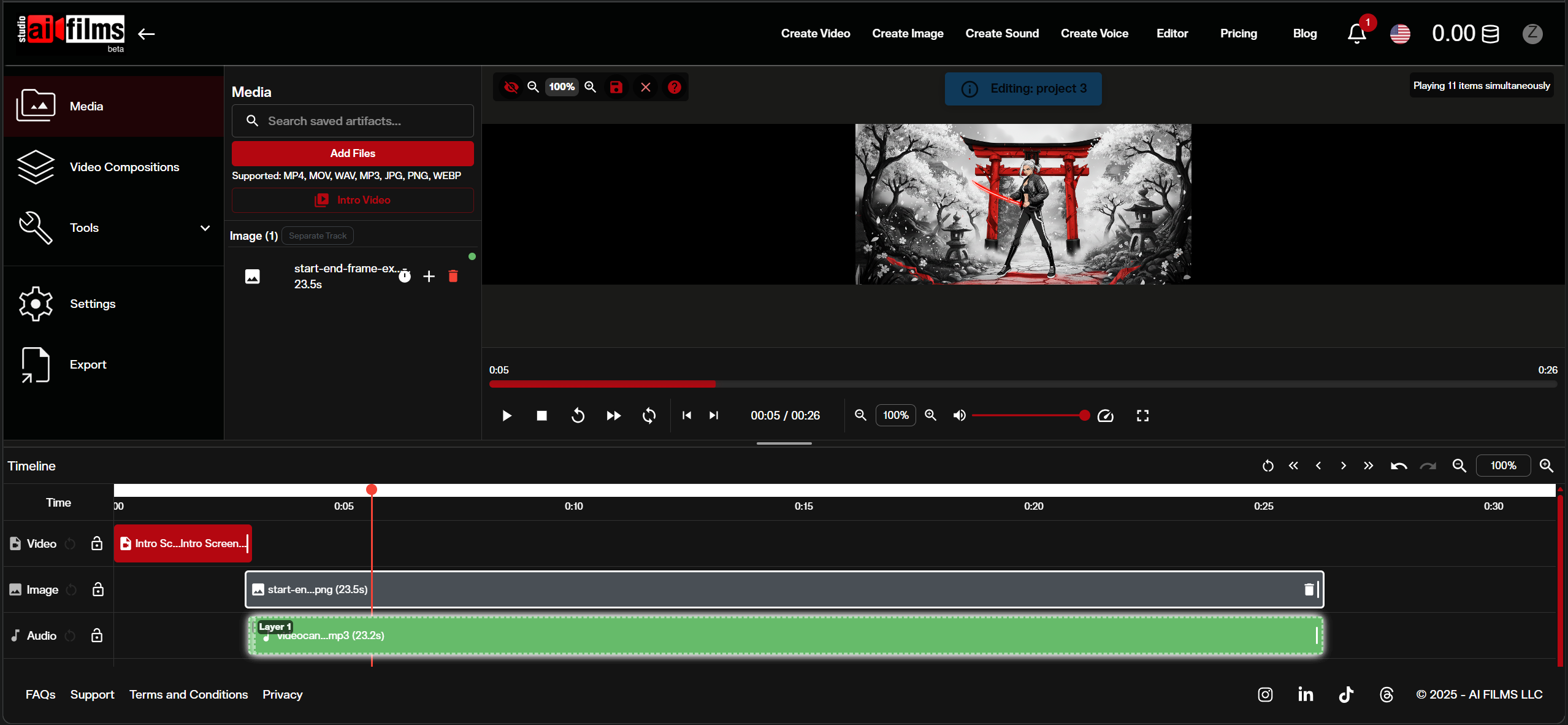

Stage 5: Timeline Assembly and Final Output

Import conformed media into a standard non linear editing system. AI generated content integrates with traditional editorial workflows using the same tools and techniques.

Begin with a rough assembly that places shots in narrative sequence. Refine timing through trim adjustments that establish pacing and rhythm.

Execute color correction for technical consistency followed by color grading for creative style. Mix audio in layers including dialogue, sound effects, ambient soundscapes, and music.

Export the final output in the delivery format appropriate to the distribution platform.

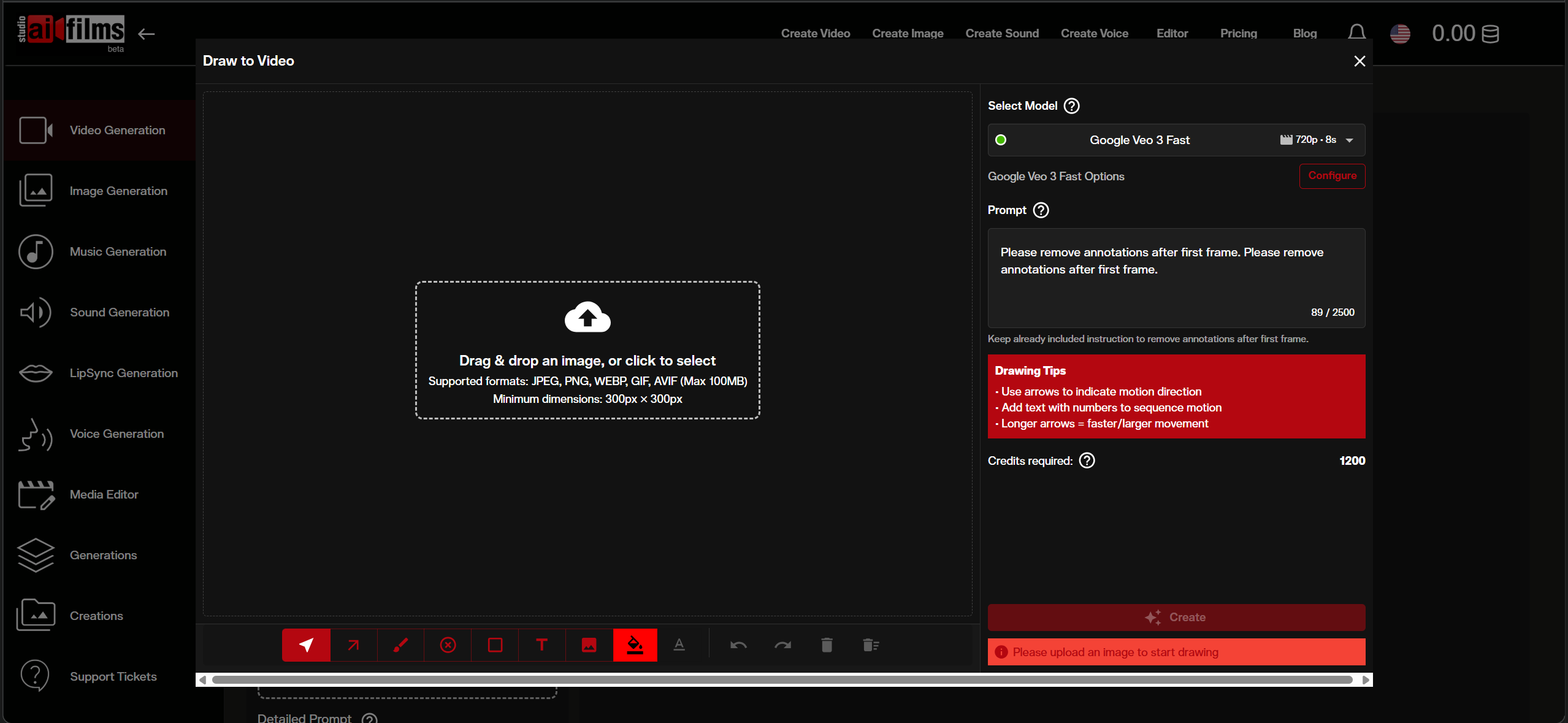

Integrated Production Platform: AI FILMS Studio

AI FILMS Studio consolidates the complete workflow from generation through final assembly in a single platform. The system removes context switching between separate tools while maintaining standard editing conventions.

Multi Modal Generation Tools

The platform provides access to leading AI models for each production task:

Text-to-Video Generation (Prompt to Video): Create videos from text prompts using Google Veo 3, Kling AI v2.1, and Luma's Dream Machine. Each model offers different strengths in motion quality, photorealism, and stylistic control.

Image-to-Video Animation (Image to Video): Transform static images into dynamic video clips. Upload concept art, storyboard frames, or reference images and apply motion that maintains the original aesthetic.

Text-to-Image Generation (Prompt to Image): Generate concept art, character designs, and environment plates using FLUX.1 and Midjourney v7. These models produce high-resolution output suitable for large format display or further video generation.

Image-to-Image Transformation (Image to Image): Apply style transfers, modify compositions, or reimagine existing visuals while maintaining core elements.

Text-to-Speech Synthesis (Prompt to Speech): Convert scripts to natural voiceover using ElevenLabs technology. The system provides emotional range control and multiple voice options.

Specialized AI Tools: Access Image Background Remover, Prompt Segmentation, Video Upscaler (via Topaz Video AI), and Image Upscaler for technical enhancement of generated content.

Additional Models Available

The platform includes access to Hailuo AI for video generation, Google's Gemini 2.5 Flash Image (Nano Banana) for rapid prototyping, and ByteDance Seed technologies (Seedream, SeedEdit) for specialized workflows.

Media Editor Timeline

The integrated Media Editor supports standard track based organization familiar from professional NLEs. Video and audio tracks provide layering flexibility for complex edits.

Trim tools with ripple, roll, and slip editing enable precise timing adjustments. Keyboard shortcuts mirror industry standard conventions.

Transition effects include cuts, dissolves, and generated transition elements. Color correction and grading tools provide both technical and creative color control.

Audio mixing supports multiple tracks with level automation, EQ, and effects processing. Export to common delivery formats with preset configurations for major platforms.

Workflow Efficiency Benefits

Generating and editing in the same environment reduces file management overhead. Assets remain available in the project without import/export cycles.

Iterative generation benefits from immediate context evaluation. Review new generations alongside existing shots to assess continuity before replacing timeline content.

The single timeline serves as the master source of truth for the edit. Changes propagate consistently without versioning confusion.

Current AI Video Models: Capabilities and Selection

Understanding the strengths and limitations of available video generation models helps optimize tool selection for specific shots and scenarios.

Google Veo 3 and Veo 3.1

Google Veo 3, released at Google I/O on May 20, 2025, produces photorealistic output with natural motion and controllable camera movements. Veo 3.1, released October 15, 2025, adds enhanced dialogue support, ambient sound integration, and improved cinematic presets.

Veo 3.1 is available through paid preview via the Gemini API and accessible in Google's Flow platform. Access varies by region and subscription tier.

All videos generated with Veo models include an invisible SynthID digital watermark embedded in the content for provenance tracking. Videos generated in the Gemini app also include a visible watermark. These watermarks help identify AI generated content.

Best for cinematic narrative content requiring photorealism, outdoor environments with natural lighting, character performances emphasizing subtle emotion, and establishing shots showing detailed environments.

Limitations include generation length typically capped at 8 seconds in consumer applications, with extend features available to create longer sequences. Veo 3.1 generates at resolutions up to 1080p.

Kling AI v2.1

Kling AI v2.1 demonstrates strong motion consistency and handles complex camera movements effectively. The model maintains spatial coherence across dynamic shots and produces stable output with minimal flickering.

Best for action sequences with camera movement, shots requiring specific camera techniques like dolly or crane movements, scenes with multiple moving elements, and content requiring consistent motion flow.

Luma Dream Machine

Dream Machine excels at stylized content and experimental visuals. The model produces creative interpretations of prompts and handles abstract concepts effectively.

Best for motion graphics and title sequences, experimental or artistic content, concept visualization emphasizing mood over photorealism, and transitions between scenes requiring creative effects.

Model Selection Strategy

Choose models based on specific shot requirements rather than using a single model for entire projects. Test the same prompt across multiple models and compare results.

Document which models produce best results for recurring shot types. Build a reference library showing model strengths for your specific content style.

Image Generation for Filmmaking Assets

Image generation supports the video production workflow by creating assets that inform or complement video content.

FLUX.1 for Technical Assets

FLUX.1 produces photorealistic images with accurate lighting and material properties. Use FLUX.1 for character reference sheets, environment concept art, prop designs, and technical visualizations.

Midjourney v7 for Stylized Content

Midjourney v7 excels at stylized imagery with strong aesthetic sensibility. Use Midjourney v7 for poster designs and key art, stylized character designs, environment mood boards, and abstract concept visualization.

Image to Image for Iterative Refinement

Transform generated images to adjust composition, style, or specific elements while maintaining core structure. Upload the initial generation, specify modifications in a text prompt, and generate variations.

Voice Synthesis and Audio Production

Audio quality significantly impacts perceived production value. AI voice synthesis enables rapid iteration on dialogue and narration.

ElevenLabs Voice Generation

ElevenLabs provides natural sounding voice synthesis with emotional control and consistent quality. Generate scratch dialogue for animatics, produce narration for educational content, create placeholder audio for timing decisions, and test different voice approaches.

Audio Layering for Production Value

Combine generated dialogue with sound effects and ambient soundscapes. A base layer of ambient sound establishes location presence. A mid layer of sound effects synchronizes to visual action. A foreground layer of dialogue stays on top so speech remains intelligible.

Video Enhancement and Upscaling

Enhancement tools improve technical quality of generated content, addressing resolution limitations and temporal artifacts.

Topaz Video AI Integration

AI FILMS Studio provides video upscaling via integrated Topaz workflows accessible through the AI Video Upscaler tool. Upscale 720p or 1080p generations to 4K for high quality delivery. Apply temporal stabilization to reduce flickering.

Process only final locked shots to avoid wasting time upscaling content that may be replaced during editing.

Background Removal and Compositing

Remove backgrounds from generated footage to isolate subjects for compositing. Generate subjects against neutral backgrounds for easier removal, then composite onto different environment plates.

Realistic Cost and Time Benchmarks

Production planning requires understanding time and cost requirements for each workflow stage. These benchmarks reflect typical performance as of November 11, 2025.

Generation Time and Iteration

Text-to-video generation for 5-10 second clips takes 10-30 minutes per shot, including the 3-6 attempts typically needed to reach an acceptable result. Complex motion, specific character features, or continuity requirements increase the attempt count.

Image-to-video generation for inserts is faster, at 5-15 minutes per shot with 1-3 attempts typical.

Voice synthesis for 30-60 second segments completes in 5-10 minutes when testing 2-3 voice options.

Sound effect generation adds 10-20 minutes per scene when combining library sources with custom generation.

Video upscaling takes 10-25 minutes per clip, depending on source resolution, target resolution, and upscaling model speed.

Cost Structure and Budget Planning

Video generation costs vary significantly across platforms and pricing tiers. Track costs per second of generated output, number of attempts included per generation, and monthly subscription versus pay-per-use models.

Budget 3-6x the raw generation cost to account for iteration and failed attempts. A 5 second shot may require generating 15-30 seconds of content across multiple attempts.
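
The multiplier can be applied per shot as a quick planning calculation. In the sketch below, the per-second price of 0.50 is a made-up figure for illustration only; substitute the rate from your own plan.

```python
def shot_budget(duration_s, cost_per_second, attempts_low=3, attempts_high=6):
    """Estimate generated seconds and cost range for one shot.

    attempts_low and attempts_high reflect the 3-6 attempts per shot
    suggested in this guide.
    """
    raw_cost = duration_s * cost_per_second
    return {
        "raw_cost": raw_cost,
        "generated_seconds": (duration_s * attempts_low, duration_s * attempts_high),
        "budget_range": (raw_cost * attempts_low, raw_cost * attempts_high),
    }


# A 5 second shot at a hypothetical 0.50 per generated second.
estimate = shot_budget(duration_s=5, cost_per_second=0.50)
print(estimate["generated_seconds"])  # (15, 30)
print(estimate["budget_range"])       # (7.5, 15.0)
```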

Image generation typically costs less per asset than video but accumulates across volume of plates and inserts required.

Voice synthesis remains relatively inexpensive compared to video generation.

Production Timeline Example

A 60 second narrative sequence with 8-10 shots typically requires:

- Planning and Setup: 2-3 hours for scene breakdown, prompt preparation, and reference gathering

- Primary Generation: 3-5 hours for text-to-video generation including iteration

- Secondary Assets: 1-2 hours for image generation and image-to-video inserts

- Audio Production: 1-2 hours for voice generation, sound effects, and ambience

- Enhancement: 2-3 hours for upscaling, color grading, and technical refinement

- Editorial Assembly: 2-4 hours for timeline editing, audio mix, and export

Total: 11-19 hours for complete 60-second sequence from concept through final delivery.
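
The total is the sum of the low and high ends of each stage range. A short sketch of that arithmetic, using the figures listed above, makes it easy to substitute your own estimates:

```python
# Hour ranges (low, high) per stage, copied from the list above.
STAGE_HOURS = {
    "Planning and Setup": (2, 3),
    "Primary Generation": (3, 5),
    "Secondary Assets": (1, 2),
    "Audio Production": (1, 2),
    "Enhancement": (2, 3),
    "Editorial Assembly": (2, 4),
}

total_low = sum(low for low, _ in STAGE_HOURS.values())
total_high = sum(high for _, high in STAGE_HOURS.values())
print(f"Total: {total_low}-{total_high} hours")  # prints "Total: 11-19 hours"
```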

Production Quality Benchmarks

Establish objective quality criteria to evaluate generation results and guide iteration decisions.

Visual Quality Metrics

Prompt Adherence: Measures how accurately the generation matches the specified subject, action, and composition.

Motion Stability: Evaluates temporal coherence and absence of flickering, warping, or generation artifacts.

Character Consistency: Verifies that character features, wardrobe, and proportions match established references and previous shots.

Technical Quality: Includes resolution, compression artifacts, color accuracy, and exposure consistency.

Benchmarking Methodology

Create a standard prompt set containing three distinct scenes representing different production challenges. Include day and night lighting conditions, slow and fast motion requirements, and single-character versus multi-character interactions.

Run the prompt set across at least three different video generation models within the same week.

Log model version, generation settings, seed values, processing time, number of failures, and cost per generation.

Score each result against the visual quality metrics. Calculate average scores per model and identify specific strengths and weaknesses.
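
The averaging step can be sketched as follows. The 1-5 scoring scale, the metric key names, and the model labels `model-a` and `model-b` are assumptions for illustration; the scores are invented sample data, not benchmark results.

```python
from collections import defaultdict
from statistics import mean

METRICS = (
    "prompt_adherence",
    "motion_stability",
    "character_consistency",
    "technical_quality",
)


def average_scores(results):
    """Average each metric per model from a list of scored generations."""
    collected = defaultdict(lambda: defaultdict(list))
    for result in results:
        for metric in METRICS:
            collected[result["model"]][metric].append(result[metric])
    return {
        model: {metric: mean(values) for metric, values in metrics.items()}
        for model, metrics in collected.items()
    }


def weakest_metric(model_averages):
    """Return the metric with the lowest average for one model."""
    return min(model_averages, key=model_averages.get)


# Invented sample scores on an assumed 1-5 scale.
results = [
    {"model": "model-a", "prompt_adherence": 4, "motion_stability": 5,
     "character_consistency": 3, "technical_quality": 4},
    {"model": "model-a", "prompt_adherence": 5, "motion_stability": 4,
     "character_consistency": 3, "technical_quality": 4},
    {"model": "model-b", "prompt_adherence": 3, "motion_stability": 3,
     "character_consistency": 4, "technical_quality": 5},
]
averages = average_scores(results)
for model, scores in averages.items():
    print(model, scores, "weakest:", weakest_metric(scores))
```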

Export a 45-60 second comparison reel showing side by side results. Provide downloadable data in CSV format with complete generation parameters and scores.

Update benchmarks quarterly to track model improvements and identify shifting capabilities.

Model Comparison Table

| Model | Best For | Max Duration | Audio | Access as of Nov 2025 | Notes |
|---|---|---|---|---|---|
| Google Veo 3.1 | Cinematic realism, character performance | 8s base (extendable) | Integrated dialogue, SFX, ambience | Gemini API paid preview, Flow platform | Includes SynthID watermark |
| Kling AI v2.1 | Complex camera movement, action | ~10s | Separate workflow | Third party endpoints (Replicate, fal.ai) | Strong motion consistency |
| Luma Dream Machine | Stylized content, experimental | ~5s | Separate workflow | Public access | Creative interpretations |
| Hailuo AI | Rapid prototyping | Varies | Limited | Platform specific | Available in AI FILMS Studio |

Table reflects capabilities as of November 11, 2025. Always verify current specifications.

Legal Considerations and Rights Management

AI filmmaking introduces specific legal questions around content ownership, licensing, and disclosure. Address these issues during planning rather than after production completion.

Model Training and Output Rights

Different AI models have different training sources and corresponding usage rights. Review each model's terms of service to understand output licensing.

Document the models used for each asset in the production. Maintain a dated matrix showing which models generated which shots and what their license terms were at generation time.
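
A dated matrix of this kind can be as simple as one record per shot. The sketch below is one possible layout; the record type `LicenseRecord`, its fields, the model labels, and the file paths are hypothetical, and the commercial use flag is something you set after reading the actual terms.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class LicenseRecord:
    """Which model produced a shot and which terms applied on that date."""
    shot_id: str
    model: str
    generated_on: date
    terms_reference: str  # where your saved copy of the terms lives
    commercial_use_confirmed: bool


def shots_needing_review(records):
    """List shot IDs whose commercial use has not been confirmed yet."""
    return sorted(r.shot_id for r in records if not r.commercial_use_confirmed)


matrix = [
    LicenseRecord("003", "model-a", date(2025, 11, 10), "terms/model-a-2025-11-10.pdf", True),
    LicenseRecord("004", "model-b", date(2025, 11, 11), "terms/model-b-2025-11-11.pdf", False),
]
print(shots_needing_review(matrix))  # ['004']
```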

Likeness and Voice Rights

Using real people's likenesses or voices requires explicit consent regardless of the generation method. Obtain signed releases that specify how the AI generated content will be used, where it will be distributed, and for what duration.

For synthetic characters created entirely through AI without reference to real individuals, document the generation process and retain evidence that no real likeness was used as reference.

Watermarking and Provenance

Some AI models embed visible or invisible watermarks in generated output. Google's Veo models include SynthID digital watermarks in all generated videos. Videos created through the Gemini app include both visible and invisible watermarks.

Content authentication systems like C2PA enable provenance tracking showing how content was created. Maintain detailed production records including generation logs, model versions, prompts, and settings.

Festival and Distribution Requirements

Film festival eligibility rules continue evolving regarding AI generated content. Some festivals require disclosure of AI usage. Research specific festival requirements before submission.

Broadcast and streaming platforms have varying policies on AI generated content. Verify requirements before final delivery.

AI FILMS Studio: Implementation Details

AI FILMS Studio implements the complete workflow described in this guide within a unified platform.

Getting Started

Visit AI FILMS Studio to create an account. The platform offers multiple pricing plans with different usage limits and features.

Plan details and current pricing are available on the Pricing page. All plans except the Basic Plan are eligible for referral discounts.

Referral Program for Creators

AI FILMS Studio operates a referral program benefiting both new users and creators who share the platform.

How it works:

- Choose your plan (except Basic Plan)

- Enter a creator's @username (referral code) at checkout

- Receive an exclusive discount automatically

- The creator earns credits to continue creating content

New users entering a creator's @username at checkout receive a discount on their subscription. Creators receive credits when referred users subscribe.

The program aligns incentives between the platform, creators, and their audiences. Creators benefit from sharing tools that improve their work. Audiences benefit from discounts through trusted recommendations.

To participate, creators simply share their @username with their community. The system tracks referrals and automatically applies credits and discounts.

Platform Tutorials and Documentation

The platform provides tutorials and documentation covering prompt engineering, workflow optimization, and technical specifications for each model. These resources shorten the learning curve and improve generation success rates.

Implementation Checklist

Execute the following steps to apply this guide effectively and maximize results from AI filmmaking workflows.

Pre-Production Planning

- Create scene breakdown with character details, environment specifications, and shot list

- Use the prompt schema provided to structure generation requests consistently

- Establish look bible with visual references, color palettes, and technical specifications

- Document legal requirements including rights clearances and distribution platform policies

Production Execution

- Generate core shots using text-to-video models

- Document each attempt with prompts, settings, and evaluation notes

- Create supplementary assets using image generation and image-to-video

- Produce audio including dialogue, sound effects, and ambience

Post-Production Assembly

- Import conformed media into timeline editor

- Verify all clips match target frame rate and aspect ratio specifications

- Execute rough cut to verify coverage completeness

- Refine timing, add transitions, apply color grading, and mix audio

Quality Assurance

- Review final output on target display devices

- Verify color accuracy, audio levels, and technical specifications

- Confirm legal documentation is complete

- Archive project files including generation logs and raw assets

Frequently Asked Questions

What is AI filmmaking?

AI filmmaking applies machine learning models to production workflows including shot generation, voice synthesis, sound design, and editorial assembly. The approach uses text-to-video generation for primary coverage, image-to-video for specialized shots, voice synthesis for dialogue and narration, and AI assisted editing. Current models can generate 5-10 second clips with photorealistic quality suitable for professional content.

Can I use AI generated content commercially?

Commercial usage depends on each model's license terms and your rights to any likenesses or voices used. Review terms of service for all models used in production. Obtain explicit consent for any real person's likeness or voice regardless of generation method. Maintain a dated licensing matrix documenting which models generated which assets and what their usage terms were at generation time.

How much does producing a 60 second AI film cost?

Costs vary significantly based on model selection, iteration requirements, and post-production processing. Text-to-video generation typically requires 3-6 attempts per shot, multiplying base generation costs. Budget for generation attempts, voice synthesis, sound effects, upscaling, and editing time. Plan for integrated platform subscriptions or per-generation pricing depending on workflow. Expect $50-200 per finished minute depending on complexity and required iteration.

Where should I assemble AI generated shots?

Use a standard timeline editor supporting multitrack organization, trim tools, color correction, and audio mixing. AI FILMS Studio provides integrated generation and editing in a single platform, eliminating context switching between tools. The Media Editor maintains standard editing conventions while keeping generated assets immediately available without file management overhead.

How do referral discounts work at AI FILMS Studio?

Enter a creator's @username during checkout for eligible subscription plans (all plans except Basic Plan). The system automatically applies your discount and credits the creator's account. Creators receive credits funding continued content production. New users benefit from reduced costs through trusted recommendations. Visit the Pricing page for current plan details.

How long does generating a complete scene take?

Complete scene generation including iteration typically requires 2-4 hours for a 30-60 second sequence with 5-8 shots. This includes planning time, multiple generation attempts per shot, voice and audio generation, and initial assembly. Complex scenes requiring specific continuity or extensive iteration may require 6-8 hours. Post-production including upscaling, color grading, and final mix adds 1-3 hours depending on complexity.

What are current limitations of AI video generation?

Current models struggle with rapid camera movements, complex multiperson choreography, precise object interactions requiring physical accuracy, maintaining specific character features across multiple shots, and generating content longer than 10-15 seconds in a single pass. Models work best for moderate paced camera movement, natural human gestures and expressions, environment establishing shots, and character focused emotional moments.

Do I need technical skills to start AI filmmaking?

Basic familiarity with video concepts, including shot types, framing, and basic editing, is helpful but not required. Text prompts use natural language rather than technical syntax. Most platforms provide templates and examples that support learning through experimentation. AI FILMS Studio consolidates tools in a unified interface, reducing technical complexity compared to managing multiple separate applications.

Which video model should I use for specific shots?

Choose models based on shot requirements. Veo 3.1 excels at photorealistic narrative content and character performances. Kling AI v2.1 handles action sequences with complex camera movements. Dream Machine works well for stylized content and creative transitions. Test the same prompt across multiple models and compare results. Build a reference library showing which models produce best results for your recurring shot types.

Conclusion

AI filmmaking provides viable production workflows for specific content types where traditional methods prove impractical due to budget, timeline, or creative requirements. Success requires systematic workflows with defined quality checkpoints rather than exploratory generation.

The field continues evolving rapidly with new models, improved capabilities, and changing cost structures. Regular testing and continuous evaluation enable informed tool selection and realistic project planning.

AI FILMS Studio consolidates the complete workflow from generation through delivery in a unified platform. The integrated approach reduces technical overhead while maintaining standard editing conventions.

Start creating with AI FILMS Studio, explore the Media Editor for timeline based assembly, or generate your first video using Prompt to Video. Use a creator's @username at checkout for referral discounts supporting both your production and the creator community.

This guide is regularly updated to reflect current capabilities and costs. Last revision: November 11, 2025.