Meta SAM 3: Text-Driven Object Segmentation and Tracking for Video

Meta released Segment Anything Model 3 (SAM 3) on November 19, 2025, introducing text-based prompting to the Segment Anything family. The model detects, segments, and tracks objects across images and video using natural language descriptions like "yellow school bus" or "person wearing red shirt" instead of requiring manual clicks or bounding boxes.

SAM 3 achieves a 2x performance improvement over existing systems on the new SA-Co (Segment Anything with Concepts) benchmark while maintaining backward compatibility with SAM 2's visual prompting capabilities. The system handles 270,000 unique concepts, over 50 times more than existing benchmarks, and was trained on a dataset containing 4 million annotated concepts.

Text Prompts for Object Segmentation

Previous Segment Anything models required visual prompts: users clicked points, drew bounding boxes, or painted masks to identify objects. SAM 3 adds text prompts, enabling description-based segmentation.

SAM 3 text prompt segmentation across multiple object categories | Source: Meta AI

Type "dog" and SAM 3 segments all dogs in the frame. Specify "yellow school bus" and it identifies only yellow school buses, not other vehicles. The system understands nuanced descriptions that combine attributes: "striped red umbrella," "person in white shirt," or "blue window with curtains."

This capability addresses a fundamental limitation in computer vision: linking language to specific visual elements. Traditional models use fixed label sets, so they segment common concepts like "person" or "car" but struggle with detailed descriptions.

SAM 3 implements promptable concept segmentation: finding and segmenting all instances of a concept defined by text or exemplar prompts. The model accepts short noun phrases as open-vocabulary prompts, eliminating constraints of predefined categories.

Exhaustive Instance Segmentation

Unlike previous SAM versions that segment single objects per prompt, SAM 3 segments every occurrence of a concept in the scene. Request "dog" and it finds all dogs, not just the one you clicked on. This exhaustive approach matches how people naturally describe what they want: "select all the chairs" rather than clicking each individually.

Exhaustive segmentation of all matching instances in complex scenes | Source: Meta AI

The system handles complex scenes with multiple similar objects, distinguishing between closely related prompts. "Player in white" versus "player in blue" requires understanding both the general category (player) and specific attributes (color). SAM 3's presence token architecture improves discrimination between such fine-grained distinctions.

Video Tracking with Text Prompts

SAM 3 extends text prompting to video, maintaining object identity across frames as objects move, occlude, or temporarily leave the scene. Prompt "person wearing green jacket" and SAM 3 tracks that person throughout the video, even through occlusions or camera cuts.

Video tracking of specified objects across frames | Source: Meta AI

The tracker component inherits SAM 2's transformer encoder-decoder architecture, propagating masklets (object-specific spatio-temporal masks) across frames. Temporal disambiguation handles occlusions by matching new detections to tracked objects and periodically re-prompting the tracker with high-confidence masks.
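The matching step can be pictured with a small sketch. This is not SAM 3's actual code: it assumes a greedy intersection-over-union (IoU) match, represents masks as sets of pixel coordinates, and uses hypothetical names (`match_detections`, `reprompt_candidates`) and thresholds chosen only for illustration.

```python
from typing import Dict, List, Set, Tuple

Mask = Set[Tuple[int, int]]  # set of (row, col) pixels; a stand-in for a binary mask


def iou(a: Mask, b: Mask) -> float:
    """Intersection over union of two pixel sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def match_detections(
    tracked: Dict[int, Mask],
    detections: List[Mask],
    iou_threshold: float = 0.5,  # hypothetical threshold
) -> Tuple[Dict[int, int], List[int]]:
    """Greedily pair tracked objects with detections, best IoU first.

    Returns (track_id -> detection index, indices of unmatched detections).
    Unmatched detections are candidates for new object IDs.
    """
    pairs = sorted(
        ((iou(mask, det), tid, j)
         for tid, mask in tracked.items()
         for j, det in enumerate(detections)),
        reverse=True,
    )
    matches: Dict[int, int] = {}
    used: Set[int] = set()
    for score, tid, j in pairs:
        if score < iou_threshold:
            break  # remaining pairs overlap even less
        if tid in matches or j in used:
            continue
        matches[tid] = j
        used.add(j)
    unmatched = [j for j in range(len(detections)) if j not in used]
    return matches, unmatched


def reprompt_candidates(
    matches: Dict[int, int],
    confidences: List[float],
    min_confidence: float = 0.9,  # hypothetical threshold
) -> List[int]:
    """Track IDs whose matched detection is confident enough to re-prompt with."""
    return [tid for tid, j in matches.items() if confidences[j] >= min_confidence]
```

A production tracker would more likely use an optimal assignment and learned association cues; greedy IoU is used here only because it is short and easy to follow.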

This enables unified detection, segmentation, and multi-object tracking through text descriptions alone. There is no manual annotation of first frames and no clicking through video timelines: just describe what you want tracked.

Image Exemplar Prompts

Beyond text, SAM 3 accepts image exemplars as prompts. Provide an example image of an object and SAM 3 finds visually similar instances. This proves useful for concepts that are difficult to describe in words but easy to show visually.

Image exemplar based segmentation finding visually similar objects | Source: Meta AI

Combine text and exemplar prompts for refined control. Text constrains the general category while exemplars specify visual characteristics. Search for "cat" matching a particular pattern or "car" resembling a specific model.

The system also supports visual prompts from SAM 1 and SAM 2: masks, bounding boxes, and points. This flexibility enables users to choose the prompt type that best suits their task and preference.
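To make the mix of prompt types concrete, here is a minimal sketch of a container that could hold them. The class `SegmentationPrompt`, its field names, and the `max_words` limit are hypothetical and are not taken from the SAM 3 codebase; mask prompts are omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1), assumed pixel coordinates
Point = Tuple[float, float, bool]        # (x, y, is_positive_click)


@dataclass
class SegmentationPrompt:
    """Hypothetical bundle of the prompt types described in the article."""
    text: Optional[str] = None                               # short noun phrase
    exemplar_boxes: List[Box] = field(default_factory=list)  # regions showing an example object
    points: List[Point] = field(default_factory=list)        # SAM 1 / SAM 2 style clicks
    boxes: List[Box] = field(default_factory=list)           # SAM 1 / SAM 2 style boxes

    def kinds(self) -> List[str]:
        """Names of the prompt types that are actually set."""
        present = []
        if self.text:
            present.append("text")
        if self.exemplar_boxes:
            present.append("exemplar")
        if self.points:
            present.append("points")
        if self.boxes:
            present.append("boxes")
        return present

    def validate(self, max_words: int = 6) -> None:
        """Reject empty prompts and overly long text (the word limit is an assumption)."""
        if not self.kinds():
            raise ValueError("at least one prompt type is required")
        if self.text and len(self.text.split()) > max_words:
            raise ValueError("text prompts work best as short noun phrases")
```

For example, `SegmentationPrompt(text="cat", exemplar_boxes=[(10, 20, 80, 90)])` expresses the combined text-plus-exemplar case described above.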

Applications for AI Filmmakers

SAM 3's capabilities enable several production workflows relevant to AI powered filmmaking and video content creation.

Automated Rotoscoping

Traditional rotoscoping requires manually tracing subjects frame by frame, a time-consuming process for visual effects work. SAM 3 automates this by tracking subjects through text prompts. Describe the subject once and extract clean mattes throughout the sequence.

For AI-generated video requiring compositing or effects work, SAM 3 provides masks isolating specific elements. Generate background plates separately from foreground subjects, then combine them using SAM 3-generated mattes.
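The compositing step itself is the standard matte blend, out = matte * foreground + (1 - matte) * background, applied per pixel. The sketch below assumes grayscale frames stored as nested lists with values in [0, 1]; the function name `composite` is illustrative, and a real pipeline would use an array library and colour channels.

```python
from typing import List

Image = List[List[float]]  # rows of grayscale values in [0, 1]


def composite(foreground: Image, background: Image, matte: Image) -> Image:
    """Blend foreground over background using a per-pixel matte in [0, 1].

    A matte value of 1 keeps the foreground pixel, 0 keeps the background,
    and fractional values give soft edges.
    """
    return [
        [m * f + (1.0 - m) * b for f, b, m in zip(f_row, b_row, m_row)]
        for f_row, b_row, m_row in zip(foreground, background, matte)
    ]
```

Running this once per frame, with the matte for that frame, yields the combined sequence.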

Object Removal and Replacement

Segment unwanted objects using text prompts, then inpaint or replace them. Rather than manually masking each frame, describe what to remove and SAM 3 handles tracking through the sequence.

This workflow works for both live footage and AI generated content. Remove distracting elements from generated scenes or replace objects with alternatives while maintaining proper occlusion and motion.

Video Dataset Annotation

Training custom AI models requires annotated data. SAM 3 accelerates dataset creation by automating segmentation and tracking. Describe the objects of interest and SAM 3 generates masks across the entire dataset.

The model achieves 75-80% of human performance on the SA-Co benchmark containing 270,000 concepts. For many annotation tasks, this accuracy greatly reduces the need for manual frame-by-frame labeling, though a gap to human performance remains.

Effect Isolation

Apply effects to specific objects without affecting the rest of the scene. Segment "person" and apply color grading, motion blur, or stylization only to that subject. The mask updates automatically as the person moves through the frame.
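As a rough sketch of effect isolation, the code below applies an arbitrary per-pixel effect only where a boolean mask is set. It assumes 8-bit grayscale frames stored as nested lists; `apply_effect_in_mask` and `brighten` are hypothetical helpers, not part of any SAM 3 or Edits API.

```python
from typing import Callable, List

Frame = List[List[int]]      # rows of 8-bit grayscale values
BoolMask = List[List[bool]]  # True where the segmented subject is


def apply_effect_in_mask(
    frame: Frame, mask: BoolMask, effect: Callable[[int], int]
) -> Frame:
    """Return a copy of the frame with `effect` applied only to masked pixels."""
    return [
        [effect(pixel) if inside else pixel for pixel, inside in zip(row, mask_row)]
        for row, mask_row in zip(frame, mask)
    ]


def brighten(value: int, gain: float = 1.5) -> int:
    """Simple stand-in for a colour grade: scale and clamp to 255."""
    return min(255, int(value * gain))


def apply_to_video(
    frames: List[Frame], masks: List[BoolMask], effect: Callable[[int], int]
) -> List[Frame]:
    """Apply the effect frame by frame, using that frame's mask."""
    return [apply_effect_in_mask(f, m, effect) for f, m in zip(frames, masks)]
```

Because each frame is paired with its own mask, the effect follows the subject as the tracked mask moves.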

Meta demonstrates this in their Edits app where creators apply effects like spotlighting or motion trails to specific subjects within videos—tasks previously requiring complex masking in professional editing software.

The SA-Co Benchmark and Training Dataset

Meta released the Segment Anything with Concepts (SA-Co) benchmark alongside SAM 3. The benchmark evaluates promptable concept segmentation across images and video with 214,000 unique concepts across 126,000 images and videos.

SA-Co provides over 50x more concepts than existing benchmarks like COCO or LVIS. It includes three evaluation sets: SA-Co/Gold with 7 domains and triple annotation, SA-Co/Silver with larger scale, and SA-Co/VEval for video evaluation with 52,500 videos containing 467,000 masklets.

The training dataset contains over 4 million unique concepts, making it the largest high-quality open-vocabulary segmentation dataset created to date, according to Meta. Building this dataset required a novel data engine combining human annotators with AI systems.

Hybrid Human AI Data Engine

Exhaustively masking every object occurrence across millions of images and videos with text labels proves prohibitively expensive using traditional annotation methods. Meta developed a scalable data engine that dramatically accelerates the process.

AI models including SAM 3 and Llama based captioning systems automatically mine images and videos, generate captions, parse captions into text labels, and create initial segmentation masks. These appear as candidates for verification.

AI verifiers based on Llama 3.2v models specifically trained for annotation tasks verify whether masks are high quality and whether all instances of a concept are exhaustively masked. These AI verifiers match or surpass human accuracy on annotation tasks while processing at much higher speed.

Human annotators then verify and correct proposals, creating a feedback loop that rapidly scales dataset coverage while continuously improving quality. By delegating routine verification to AI annotators, the system more than doubles throughput compared to human only pipelines.

AI annotators automatically filter out easy examples, focusing valuable human annotation effort on challenging cases where the current SAM 3 version fails. This active learning approach ensures human expertise concentrates on maximum impact improvements.
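The routing idea can be sketched as follows. This assumes each candidate carries two AI-verifier scores (mask quality and exhaustivity) and that confident cases are decided automatically while uncertain ones go to people. The `Candidate` type, the score fields, and both thresholds are hypothetical; the article does not describe how Meta's engine makes this decision.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Candidate:
    phrase: str
    mask_quality: float  # verifier's confidence that the masks are good, in [0, 1]
    exhaustive: float    # verifier's confidence that every instance is masked, in [0, 1]


def route(
    candidates: List[Candidate],
    accept_at: float = 0.9,  # hypothetical threshold
    reject_at: float = 0.1,  # hypothetical threshold
) -> Dict[str, List[str]]:
    """Split candidates into auto-accepted, auto-rejected, and human-review queues."""
    queues: Dict[str, List[str]] = {"accept": [], "reject": [], "human": []}
    for c in candidates:
        score = min(c.mask_quality, c.exhaustive)  # both checks must pass
        if score >= accept_at:
            queues["accept"].append(c.phrase)
        elif score <= reject_at:
            queues["reject"].append(c.phrase)
        else:
            queues["human"].append(c.phrase)  # uncertain: worth a person's time
    return queues
```

Only the middle band reaches human annotators, which is one way to concentrate their effort on cases where the model is unsure.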

The data engine achieves a 5x speedup on negative prompts (concepts not present in the image) and 36% faster annotation for positive prompts, even in challenging fine-grained domains.

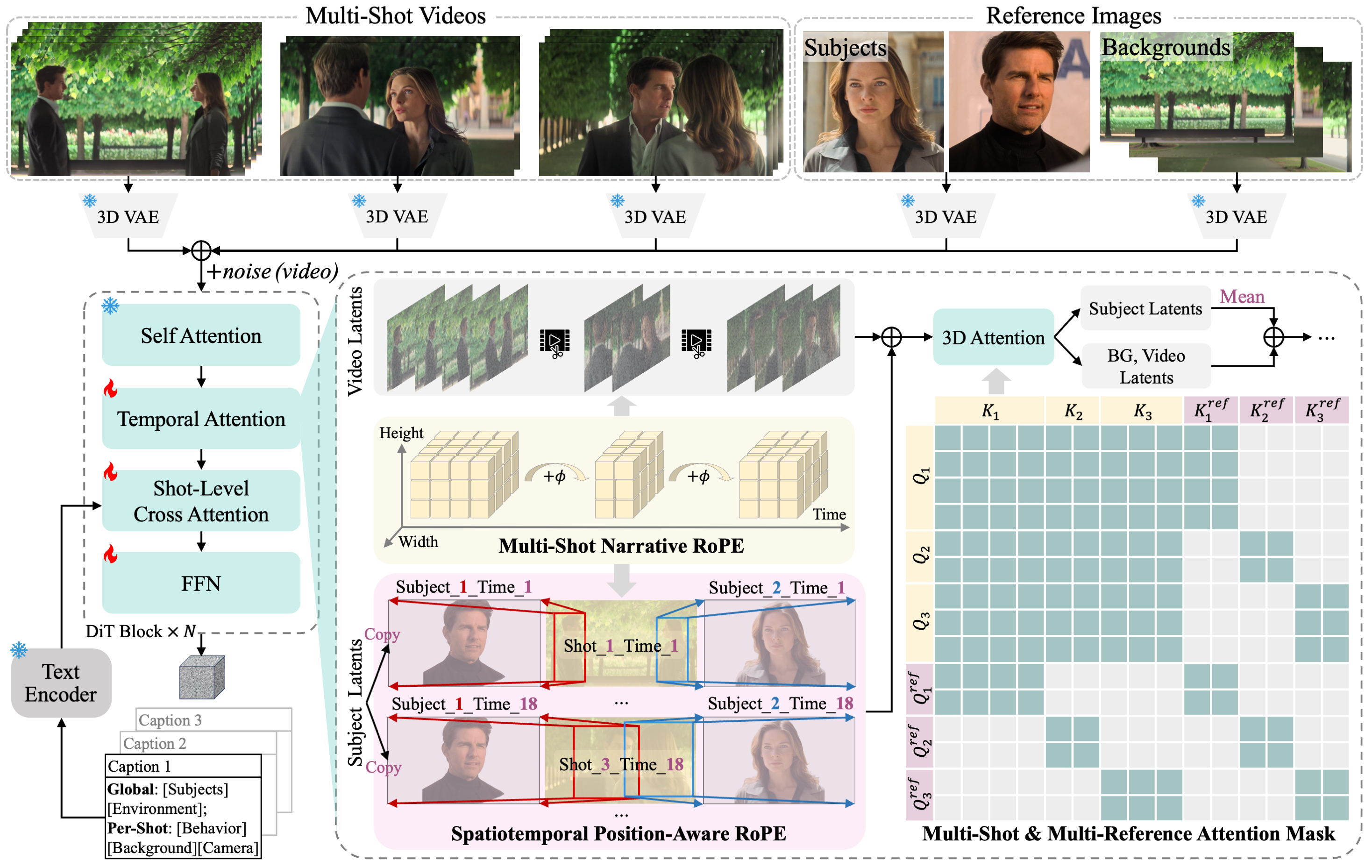

Model Architecture

SAM 3 consists of a detector and tracker that share a vision encoder. The complete model contains 848 million parameters.

The detector uses a DETR-based architecture conditioned on text, geometry, and image exemplars. Text and image encoders come from Meta's Perception Encoder, an open-source model released in April 2025 that enables advanced computer vision systems.

Using the Perception Encoder provided significant performance gains over previous encoder choices. The detector handles text prompts, image exemplars, and visual prompts like points, boxes, and masks.

The presence token architecture improves discrimination between closely related text prompts. When multiple similar concepts appear in a scene, the presence token helps the model distinguish between "player in white" versus "player in blue" rather than confusing similar entities.
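The article does not spell out how the presence token works. One plausible reading, used here purely as an assumption, is that a single global score answers "is this concept in the image at all?" and gates the per-object scores, so that recognition is separated from localization. The function names and the sigmoid-product formulation below are illustrative.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def final_scores(presence_logit: float, query_logits: List[float]) -> List[float]:
    """Gate each object query's score by a global concept-presence probability.

    ASSUMPTION: final score = P(concept present) * P(this query matches).
    """
    presence = sigmoid(presence_logit)
    return [presence * sigmoid(q) for q in query_logits]
```

Under this reading, a prompt like "player in blue" on a frame with only white-shirted players would get a low presence probability, suppressing every candidate even if individual boxes look player-like.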

The tracker inherits SAM 2's transformer encoder-decoder architecture with memory bank and memory encoder enabling video segmentation and interactive refinement. This combination allows SAM 3 to detect new instances and maintain consistent object IDs through time.

Performance and Speed

SAM 3 runs in 30 milliseconds for a single image with over 100 detected objects on an H200 GPU. For video, inference latency scales with the number of objects, sustaining near real-time performance for approximately five concurrent objects.

On the SA-Co benchmark, SAM 3 doubles cgF1 scores (measuring how well the model recognizes and localizes concepts) compared to existing models. The system consistently outperforms both foundational models like Gemini 2.5 Pro and strong specialist baselines.
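For intuition about what a combined "recognize and localize" score can look like, the sketch below multiplies a mask-level F1 (localization) by an image-level Matthews correlation coefficient (did the model correctly decide whether the concept is present?). Treating cgF1 as this product, scaled to 0-100, is an assumption about the paper's definition and should be checked against the paper before relying on it.

```python
import math
from typing import Tuple


def f1(tp: int, fp: int, fn: int) -> float:
    """F1 over matched masks: 2TP / (2TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def mcc(tp: int, fp: int, tn: int, fn: int) -> float:
    """Matthews correlation coefficient for image-level present/absent decisions."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


def cgf1(mask_counts: Tuple[int, int, int],
         image_counts: Tuple[int, int, int, int]) -> float:
    """ASSUMED form: 100 * localization F1 * image-level classification MCC."""
    return 100.0 * f1(*mask_counts) * mcc(*image_counts)
```

The multiplicative form means a model cannot score well by localizing accurately while frequently hallucinating concepts that are absent, or the reverse.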

In user studies, people prefer SAM 3 outputs over the strongest baseline (OWLv2) by approximately three to one. SAM 3 also achieves state-of-the-art results on SAM 2 visual segmentation tasks, matching or exceeding the previous model's performance.

On challenging benchmarks like zero-shot LVIS and CountBench (object counting), SAM 3 shows notable gains over previous approaches. The model demonstrates particular strength on nuanced queries requiring understanding of specific attributes beyond general categories.

SAM 3 Agent for Complex Queries

SAM 3 can function as a tool for multimodal large language models, enabling segmentation of complex text queries beyond simple noun phrases. SAM 3 Agent uses an MLLM that proposes noun phrase queries to prompt SAM 3, analyzes returned masks, and iterates until masks are satisfactory.

Example complex query: "What object in the picture is used for controlling and guiding a horse?" The MLLM breaks this down into simpler noun phrases, prompts SAM 3, evaluates results, and continues iterating.
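The propose, segment, evaluate loop can be sketched with plain callables standing in for the MLLM and for SAM 3. Everything here is hypothetical scaffolding: `agent_segment`, the three callback signatures, and the round limit are not the real SAM 3 Agent interface.

```python
from typing import Callable, List, Optional, Tuple

History = List[Tuple[str, int]]  # (phrase tried, number of masks returned)


def agent_segment(
    query: str,
    propose: Callable[[str, History], str],         # MLLM: next noun phrase to try
    segment: Callable[[str], List[str]],            # SAM 3 stand-in: phrase -> masks
    accept: Callable[[str, str, List[str]], bool],  # MLLM: are these masks satisfactory?
    max_rounds: int = 5,
) -> Optional[Tuple[str, List[str]]]:
    """Iterate until the judge accepts the masks or the round budget runs out."""
    history: History = []
    for _ in range(max_rounds):
        phrase = propose(query, history)
        masks = segment(phrase)
        if accept(query, phrase, masks):
            return phrase, masks
        history.append((phrase, len(masks)))  # feedback for the next proposal
    return None
```

Passing the history back to `propose` lets the language model avoid repeating phrases that already failed.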

Without training on referring expression segmentation or reasoning segmentation data, SAM 3 Agent surpasses prior work on challenging free text segmentation benchmarks requiring reasoning like ReasonSeg and OmniLabel.

This agent approach extends SAM 3's capabilities beyond direct text prompts to handle questions, descriptions, and reasoning based queries by combining SAM 3's segmentation with an MLLM's language understanding.

Segment Anything Playground

Meta launched the Segment Anything Playground, a web platform enabling anyone to experiment with SAM 3 without technical expertise. Users upload images or videos and test segmentation capabilities through a simple interface.

The playground includes templates for practical applications: pixelating faces, license plates, or screens for privacy; adding effects like spotlights or motion trails to specific objects; and annotating visual data for research or development.

This accessibility contrasts with SAM 2's primarily research focused release. Meta positions SAM 3 for broader adoption through consumer applications and easy experimentation platforms.

The playground features recordings from Meta's Aria Gen 2 research glasses, demonstrating SAM 3's capabilities on first person wearable footage. This enables robust segmentation from egocentric perspectives—relevant for applications in machine perception, contextual AI, and robotics.

Integration in Meta Products

Meta immediately deployed SAM 3 into consumer applications alongside the research release. Facebook Marketplace uses SAM 3 and SAM 3D for the new "View in Room" feature, helping people visualize furniture and home decor in their spaces before purchasing.

Instagram's Edits app integrates SAM 3 for object specific video effects. Creators apply modifications like color grading or motion effects to specific subjects with a single tap—workflows that previously required professional editing software and manual masking.

The Vibes feature within Meta AI uses SAM 3 to enable remixing of AI generated videos. Users can isolate and modify specific elements while maintaining the rest of the scene.

This rapid deployment from research release to production features marks a shift from previous SAM versions that remained primarily within the research domain.

Current Limitations

SAM 3 performs best with short noun phrases reflecting common user intent. The model can segment objects described by simple text but may struggle with very long or complex descriptions without the SAM 3 Agent wrapper.

Video processing cost scales linearly with the number of objects tracked. Each object is processed separately using shared per-frame embeddings, without inter-object communication. Complex scenes with many visually similar objects may benefit from incorporating shared contextual information.
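A simple cost model illustrates the linear scaling: one shared per-frame cost plus a fixed cost per tracked object. The millisecond figures in the usage note are made-up placeholders, not measurements of SAM 3, and the function names are illustrative.

```python
def frame_latency_ms(num_objects: int, shared_ms: float, per_object_ms: float) -> float:
    """Per-frame latency: shared embedding cost plus a linear per-object term."""
    return shared_ms + num_objects * per_object_ms


def max_realtime_objects(target_fps: float, shared_ms: float, per_object_ms: float) -> int:
    """Largest object count whose per-frame latency still fits the frame budget."""
    budget_ms = 1000.0 / target_fps - shared_ms
    return max(0, int(budget_ms // per_object_ms))
```

With placeholder costs of 15 ms shared and 5 ms per object, `max_realtime_objects(25, 15.0, 5.0)` gives 5 objects at 25 frames per second; doubling the object count under the same costs pushes latency to 65 ms, or roughly 15 frames per second.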

The model focuses on object level segmentation. Scene level understanding, relationships between objects, or high level reasoning about scene context fall outside SAM 3's core capabilities, though the SAM 3 Agent approach addresses some of these limitations.

Training data covers diverse domains but may show performance variations across specialized visual domains or extremely rare concepts outside the 4 million concept training set.

Open Source Release

Meta released SAM 3 as open source through GitHub with model weights, inference code, training code, and example notebooks. The SA-Co benchmark datasets are publicly available through HuggingFace and Roboflow.

The research paper detailing SAM 3's architecture, training methodology, and evaluation results is available through OpenReview as a submission to ICLR 2026.

Open source release enables integration into custom pipelines, finetuning for specialized domains, and research extensions building on SAM 3's capabilities. The permissive licensing follows Meta's approach with previous Segment Anything releases.

Community integrations have already emerged, including ComfyUI nodes for SAM 3 that enable text-prompt segmentation within popular AI art generation workflows.

Scientific Applications

SAM 3 is already being applied in scientific research. Meta collaborated with Conservation X Labs and Osa Conservation to build the SA-FARI dataset: over 10,000 camera trap videos of more than 100 species with bounding boxes and segmentation masks for every animal in each frame.

FathomNet, led by MBARI, uses SAM 3 for ocean exploration and marine research. Segmentation masks and an instance segmentation benchmark tailored for underwater imagery are now available to the marine research community.

These datasets demonstrate SAM 3's utility beyond consumer applications, enabling wildlife monitoring, conservation efforts, and marine biology research through automated analysis of video footage.

Implementation Considerations

Filmmakers and content creators can access SAM 3 through multiple paths depending on technical requirements and workflow preferences.

The Segment Anything Playground provides immediate no code access for testing capabilities and experimenting with segmentation on uploaded media. This serves exploration, concept testing, and lightweight production use.

For production integration, the GitHub repository provides Python code for local deployment. This enables custom workflows, batch processing, and integration into existing pipelines. Requirements include Python 3.11+, PyTorch, and appropriate GPU hardware for acceptable performance.

API access through Meta's platforms may become available for cloud-based integration without managing local infrastructure. For now, using the model requires local deployment of the open-source release.

Performance scales with available GPU hardware. The 30 ms image processing figure was measured on an H200 GPU. Consumer GPUs process more slowly but remain usable for non-real-time applications.

Looking Forward

SAM 3 represents continued progress in unified vision models that understand both images and language. The combination of text prompting, exhaustive instance segmentation, and video tracking creates capabilities relevant across computer vision applications.

For AI filmmaking specifically, SAM 3 enables more efficient video editing workflows by automating tedious masking and tracking tasks. As the technology integrates into accessible platforms and editing tools, these capabilities may become standard features rather than specialized techniques.

The opensource release and accessible playground lower barriers for experimentation and adoption. Content creators can explore SAM 3's utility for their specific workflows without significant investment in implementation.

The field continues evolving rapidly. SAM 3 arrived roughly sixteen months after SAM 2, which was released in July 2024, and it adds an entirely new prompting modality. Future iterations will likely address current limitations around multi-object efficiency and scene-level understanding.

Explore AI video generation and editing tools at AI FILMS Studio, where emerging capabilities like advanced segmentation and tracking may integrate into accessible production workflows as the technology matures.

Resources:

- Meta AI Blog: https://ai.meta.com/blog/segment-anything-model-3/

- Research Paper (OpenReview): https://openreview.net/forum?id=r35clVtGzw

- GitHub Repository: https://github.com/facebookresearch/sam3

- Segment Anything Playground: https://www.aidemos.meta.com/segment-anything

- SA-Co Dataset: https://github.com/facebookresearch/sam3/blob/main/README.md#sa-co-dataset