Tencent HunyuanVideo 1.5: 8.3B Parameters Run on Consumer GPUs

Tencent released HunyuanVideo 1.5 on November 20, 2025. The model achieves state-of-the-art (SOTA) visual quality among open-source systems with 8.3 billion parameters, enabling inference on consumer-grade GPUs with 14GB of VRAM.

Technical Architecture

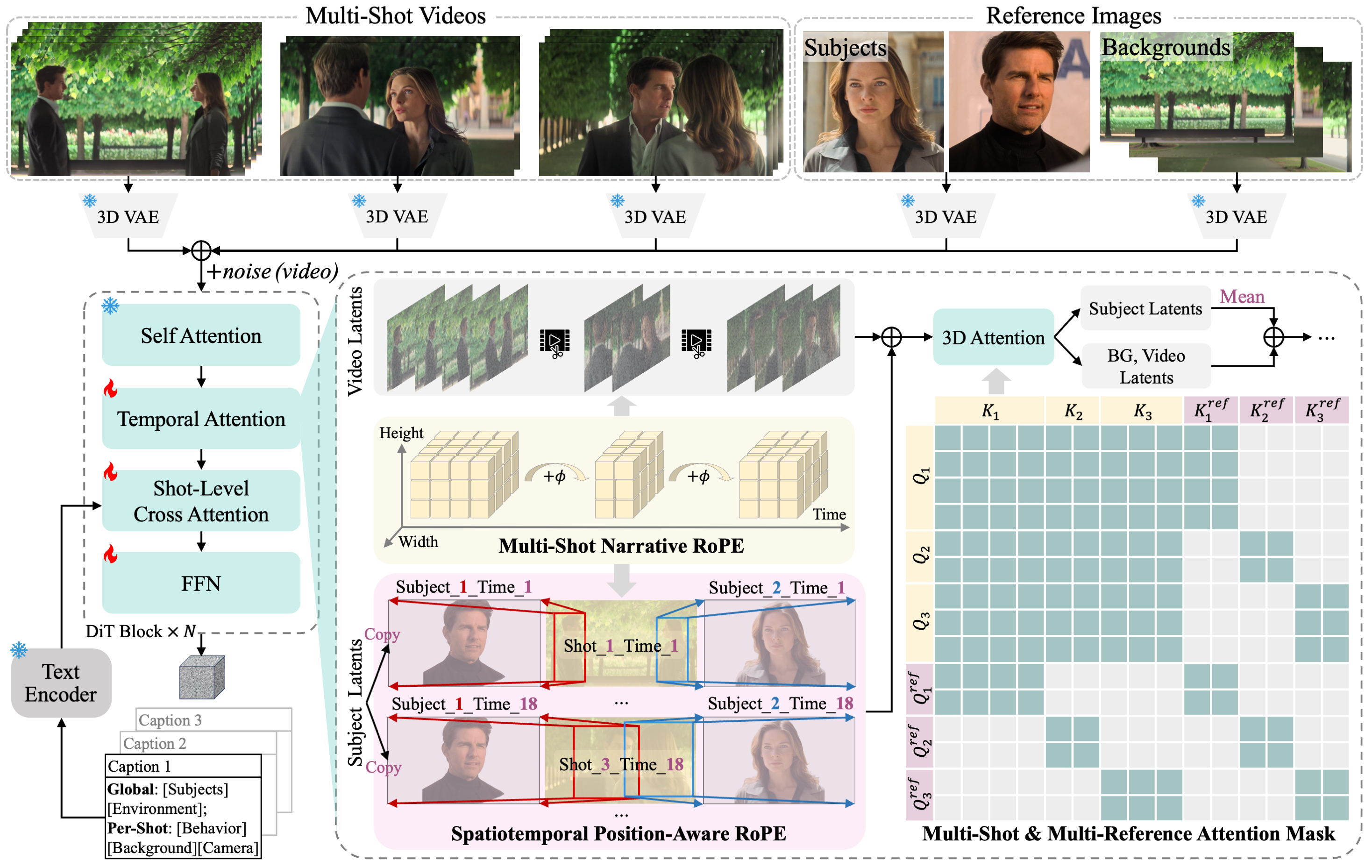

The system integrates an 8.3B-parameter Diffusion Transformer with a 3D causal VAE. Compression ratios reach 16× in spatial dimensions and 4× along the temporal axis.
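To make the compression ratios concrete, the sketch below computes the latent grid a video would occupy after the VAE. The function name, the 121-frame clip length, and the treatment of the first frame (encoded on its own, as is common for causal video VAEs) are assumptions for illustration and are not confirmed by the release notes.

```python
def latent_shape(frames: int, height: int, width: int,
                 spatial_ratio: int = 16, temporal_ratio: int = 4) -> tuple[int, int, int]:
    """Return (latent_frames, latent_height, latent_width) for a video."""
    if height % spatial_ratio or width % spatial_ratio:
        raise ValueError("height and width must be divisible by the spatial ratio")
    # Assumption: the causal VAE encodes the first frame alone, then groups
    # the remaining frames in chunks of `temporal_ratio`.
    latent_frames = 1 + (frames - 1) // temporal_ratio
    return latent_frames, height // spatial_ratio, width // spatial_ratio


# Hypothetical 121-frame clip at 1280x720.
t, h, w = latent_shape(frames=121, height=720, width=1280)
print(t, h, w)  # 31 45 80
```

Under these assumptions the Transformer sees 31 × 45 × 80 latent positions instead of 121 × 720 × 1280 pixels, which is where much of the memory saving comes from.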

Selective and Sliding Tile Attention (SSTA) prunes redundant spatiotemporal key/value blocks. This reduces computational overhead for long sequences, achieving a 1.87× speedup for 10-second 720p synthesis compared to FlashAttention-3.
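The general idea of combining a sliding window with selective block pruning can be illustrated with a toy example. This is not Tencent's SSTA implementation: the scoring input, the `window` and `top_k` parameters, and the function name are all hypothetical, chosen only to show how a query block might end up attending to a subset of key/value blocks.

```python
def select_kv_blocks(scores, query_block, window=1, top_k=2):
    """Pick which key/value blocks one query block attends to.

    scores: one importance score per key/value block (hypothetical input,
    for example a pooled query-key similarity).
    """
    n = len(scores)
    # Sliding part: always keep blocks within `window` of the query block.
    keep = {j for j in range(n) if abs(j - query_block) <= window}
    # Selective part: add the highest-scoring blocks outside the window.
    outside = sorted((j for j in range(n) if j not in keep),
                     key=lambda j: scores[j], reverse=True)
    keep.update(outside[:top_k])
    return sorted(keep)


scores = [0.9, 0.1, 0.2, 0.05, 0.3, 0.8, 0.1, 0.02]
kept = select_kv_blocks(scores, query_block=3)
print(kept)                     # [0, 2, 3, 4, 5]
print(len(kept) / len(scores))  # 0.625
```

In this toy case the query block skips three of eight key/value blocks. Savings of this kind grow with sequence length, which is consistent with the speedup being reported for long 720p clips.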

Cinematic film grain aesthetics | Tencent HunyuanVideo

Smooth motion generation | Tencent HunyuanVideo

The model uses a dual-stream to single-stream hybrid design. Video and text tokens are processed independently through the initial Transformer blocks, allowing each modality to learn appropriate modulation mechanisms. Later blocks concatenate the tokens for multimodal fusion.

A super-resolution network upscales outputs to 1080p through a few-step process. The network enhances sharpness while correcting distortions, refining details and visual texture.

Training Strategy

Multi-stage progressive training covers pre-training through post-training. The Muon optimizer accelerates convergence. This approach refines motion coherence, aesthetic quality, and human preference alignment.

Glyph-aware text encoding enhances bilingual understanding. The system handles English and Chinese prompts with equal capability, processing complex scene descriptions and stylistic instructions.

Physics-compliant interactions | Tencent HunyuanVideo

Camera movement simulation | Tencent HunyuanVideo

Data curation emphasizes quality over volume. Training examples span cinematic aesthetics, physics compliance, camera movements, and multiple visual styles.

Hardware Requirements and Performance

Minimum specification: 14GB GPU memory with model offloading enabled

Recommended setup: 80GB GPU for optimal generation quality

Operating system: Linux

Python version: 3.10 or higher

CUDA compatibility: Required for PyTorch installation

Inference speed tests on 8 H800 GPUs demonstrate practical performance for real-world deployment. The team prioritized speed improvements that preserve output quality rather than extreme acceleration at the cost of generation fidelity.

Lower-memory configurations work through CPU offloading, which trades inference speed for accessibility. When enough GPU memory is available, disabling offloading improves performance.
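The memory guidance above can be expressed as a small decision helper. The 14GB minimum comes from the stated requirements. Treating 80GB as the point where offloading can be switched off is an assumption, since the text does not give an exact threshold, and the function name and return labels are hypothetical.

```python
def plan_memory(vram_gb: float, min_gb: float = 14.0,
                no_offload_gb: float = 80.0) -> str:
    """Suggest an offloading mode for a given amount of GPU memory."""
    if vram_gb < min_gb:
        return "unsupported"  # below the stated 14GB minimum
    if vram_gb < no_offload_gb:
        return "offload"      # slower, but fits in memory
    # Assumption: the recommended 80GB setup is enough to disable offloading.
    return "no-offload"


for gb in (12, 24, 80):
    print(gb, plan_memory(gb))
```

The real cutoff depends on resolution, clip length, and the acceleration options in use, so the second threshold is best treated as a tunable parameter.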

Generation Capabilities

The unified framework handles text-to-video and image-to-video generation across multiple durations and resolutions.

Text-to-video mode generates 5-10 second clips at 480p or 720p from prompt descriptions. Evaluation considers text-to-video consistency, visual quality, structural stability, motion effects, and frame aesthetics.

Text rendering within scene | Tencent HunyuanVideo

Multi style support | Tencent HunyuanVideo

Image-to-video mode animates reference images while preserving color, style, and character design. Assessment includes image-to-video consistency, instruction responsiveness, visual quality, structural stability, and motion effects.

Demonstrated capabilities:

- Cinematic aesthetics with film grain and lighting control

- Smooth motion generation across complex scenes

- Physics compliance for realistic object interactions

- Camera movement simulation (pans, tilts, tracking shots)

- Multi-style support (claymation, anime, photorealistic)

- Text rendering within generated scenes

- High image-video consistency for animation workflows

Complex scene composition | Tencent HunyuanVideo

Realistic lighting effects | Tencent HunyuanVideo

Prompt Optimization System

The model requires detailed prompts for high-quality output. Tencent recommends prompt enhancement through vLLM-compatible language models or Gemini.

Default behavior: Prompt rewriting is enabled. It expands short inputs into structured descriptions covering camera angles, subject attributes, lighting, and mood.

Manual control: Users can disable rewriting with the --rewrite false flag. This may lower generation quality but provides direct prompt control.

Character animation | Tencent HunyuanVideo

Image-to-video consistency | Tencent HunyuanVideo

The system includes built-in prompt optimization templates:

- t2v_rewrite_system_prompt for text-to-video refinement

- i2v_rewrite_system_prompt for image-to-video enhancement

Example transformation: The simple input "A girl holding paper with words" expands into a detailed scene description that includes hand position, paper angle, lighting conditions, and background elements.
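A rewriting step like this is typically a chat request that pairs a system prompt with the user's short input. The sketch below only builds such a request in the OpenAI-style chat format that vLLM's server accepts. The system prompt text is a placeholder rather than the real t2v_rewrite_system_prompt from the repository, and the model name and function name are hypothetical.

```python
import json

# Placeholder text: the real t2v_rewrite_system_prompt ships with the repository.
T2V_REWRITE_SYSTEM_PROMPT = (
    "Expand the user's prompt into a detailed video description covering "
    "camera angle, subject attributes, lighting, and mood."
)


def build_rewrite_request(user_prompt: str, model: str,
                          system_prompt: str = T2V_REWRITE_SYSTEM_PROMPT) -> dict:
    """Build an OpenAI-style chat payload for a prompt-rewriting model."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }


# "my-rewrite-model" is a hypothetical name for whatever model is being served.
payload = build_rewrite_request("A girl holding paper with words",
                                model="my-rewrite-model")
print(json.dumps(payload, indent=2))
```

The payload would be posted to the chat completions endpoint of the serving stack, and the model's reply then replaces the original prompt before generation.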

Benchmark Performance

Professional human evaluation used 300 diverse text prompts and 300 image samples. Over 100 assessors rated outputs against baseline models.

The GSB (Good/Same/Bad) methodology compared overall video perception quality. Each prompt was run through inference once to ensure a fair comparison, and results were not cherry-picked.
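A GSB comparison reduces to counting how often assessors judged one model's output better than, equal to, or worse than the baseline. The helper below shows one common way to summarize such counts. The net-preference formula, (good - bad) / total, is an assumed convention, and the counts are made up for illustration rather than taken from the evaluation.

```python
def gsb_summary(good: int, same: int, bad: int) -> dict:
    """Summarize Good/Same/Bad counts as rates plus a net preference score."""
    total = good + same + bad
    if total == 0:
        raise ValueError("no ratings")
    return {
        "good": good / total,
        "same": same / total,
        "bad": bad / total,
        # Assumed convention: positive means the model beats the baseline.
        "net_preference": (good - bad) / total,
    }


# Hypothetical counts for 300 prompts; not results from the evaluation.
summary = gsb_summary(good=150, same=90, bad=60)
print(summary)
```

A net preference near zero means the two models are roughly tied, while values toward 1 or -1 indicate a clear winner.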

Dynamic camera tracking | Tencent HunyuanVideo

Stylized rendering | Tencent HunyuanVideo

The model establishes a new SOTA among open-source systems. Evaluation shows competitive performance with closed-source alternatives while maintaining accessibility advantages.

Key strength areas:

- Motion quality scores highest among tested models

- Structural stability across frame sequences

- Text alignment accuracy for prompt adherence

- Visual quality consistent with professional standards

Implementation and Deployment

Direct installation: Clone the repository, install the dependencies, and download the model weights from Hugging Face or ModelScope.
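For the weight download step, one option is the `snapshot_download` function from the `huggingface_hub` package. The repository ID below is taken from the Hugging Face model card URL listed in the sources. The `./ckpts` target directory and the function name are assumptions, so check the repository's own setup guide for the layout its scripts expect.

```python
REPO_ID = "tencent/HunyuanVideo-1.5"  # from the model card URL in the sources


def download_weights(local_dir: str = "./ckpts") -> str:
    """Download the model repository and return the local path.

    `local_dir` is an assumed location, not one mandated by the project.
    """
    # Imported here so this module loads even without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=REPO_ID, local_dir=local_dir)


# Usage (downloads many gigabytes, so it is not run automatically):
#   path = download_weights()
```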

Natural environment rendering | Tencent HunyuanVideo

Complex motion sequences | Tencent HunyuanVideo

The open-source release enables developers to integrate the model into custom pipelines and production workflows. Community implementations continue to expand deployment options.

For filmmakers exploring AI video generation workflows, try AI FILMS Studio's video generation tools to experiment with text-to-video and image-to-video capabilities across multiple models.

Acceleration Features

CFG-distilled models enable a 2× speedup through classifier-free guidance distillation. Use the --cfg_distilled true flag to enable them.

Sparse attention provides a 1.5-2× speedup on H-series GPUs. It requires the flex-block-attn library and automatically enables CFG distillation.

SageAttention offers an alternative acceleration approach. Specify block ranges with the --sage_blocks_range parameter.

Torch compile optimization is available through the --enable_torch_compile flag for transformer acceleration.

Multiple techniques can combine for cumulative performance gains, though quality tradeoffs increase with aggressive optimization.
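When scripting batch runs, it can help to assemble these flags programmatically. The sketch below uses only flag names mentioned in this article. The entry script name `generate.py`, the `--prompt` argument, and passing `--enable_torch_compile` without a value are assumptions, so verify them against the repository's usage guide.

```python
import shlex


def build_command(prompt, cfg_distilled=False, rewrite=True,
                  sage_blocks_range=None, torch_compile=False,
                  script="generate.py"):
    """Assemble a command line as a list of arguments.

    `script` and `--prompt` are hypothetical; the other flags are the
    ones named in the article.
    """
    cmd = ["python", script, "--prompt", prompt]
    if cfg_distilled:
        cmd += ["--cfg_distilled", "true"]
    if not rewrite:
        cmd += ["--rewrite", "false"]
    if sage_blocks_range is not None:
        # The value format is not specified in the article; pass it through.
        cmd += ["--sage_blocks_range", sage_blocks_range]
    if torch_compile:
        cmd.append("--enable_torch_compile")
    return cmd


cmd = build_command("A cat walking on grass", cfg_distilled=True, torch_compile=True)
print(shlex.join(cmd))
```

Building the command as a list keeps prompts that contain spaces or quotes intact if the list is later passed to `subprocess.run`.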

Open Source Availability

Code: GitHub

Weights: Hugging Face

License: Tencent Hunyuan Community License

Documentation: Comprehensive setup and usage guides included

The release includes:

- Complete inference implementation

- Model weights for all resolutions

- Training code for reproduction

- Example prompts and test data

- Integration guides for popular frameworks

Practical Deployment Considerations

The 8.3B parameter count enables deployment scenarios previously requiring data center infrastructure. Independent creators, educational institutions, and research labs can run the model locally.

Cost comparison: Local inference on owned hardware versus API calls to closed-source services. For high-volume generation, local deployment reduces per-clip costs significantly.

Privacy advantages: Processing sensitive content locally avoids third-party API exposure. This is relevant for client work, proprietary content, and confidential projects.

Iteration speed: Local control enables rapid prompt testing without rate limits or queue times. This benefits experimental workflows and creative exploration.

Customization potential: Open architecture allows fine-tuning on specific datasets, style adaptation, and integration with custom pipelines.
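The cost comparison above comes down to a break-even calculation: how many clips must be generated before the hardware purchase is offset by the per-clip saving over an API. The sketch below shows that arithmetic. All prices are placeholder values, not quotes from any provider, and the function name is hypothetical.

```python
import math


def break_even_clips(hardware_cost: float, api_price_per_clip: float,
                     local_cost_per_clip: float):
    """Return the number of clips after which local inference is cheaper.

    Returns None when the API is cheaper or equal per clip, in which case
    the hardware cost is never recovered.
    """
    saving = api_price_per_clip - local_cost_per_clip
    if saving <= 0:
        return None
    return math.ceil(hardware_cost / saving)


# Placeholder figures for illustration only.
print(break_even_clips(hardware_cost=2000.0,
                       api_price_per_clip=0.75,
                       local_cost_per_clip=0.25))  # 4000
```

The local per-clip cost should include electricity and the operator's time, which is why the advantage shows up mainly at high volume.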

Current Limitations

The model represents mid-tier performance in the 2025 video generation landscape. It achieves solid results without setting new quality records.

Resolution constraints: Native generation at 480p or 720p. Super-resolution upscaling to 1080p adds processing time and potential artifacts.

Length limitations: 5-10 second clips are standard. Longer sequences require computational resources beyond consumer hardware specifications.

Prompt dependency: Quality relies heavily on detailed, well-structured prompts. Short descriptions produce inconsistent results.

Deployment complexity: Linux-only support. Windows users require WSL2 with uncertain CUDA compatibility.

Provider limitations: The cloud endpoint (Fal.ai) currently caps at 480p despite the model's 1080p capability.

What This Means for Video Production

The release demonstrates that an efficient architecture can match larger models. Parameter count matters less than design choices around attention mechanisms, compression strategies, and training methods.

Democratization continues: professional-grade video generation moves from exclusive cloud services to local workstations. The barrier to entry drops for small studios, independent creators, and academic research.

Open-source advantage: the community can audit, modify, and extend the technology. Unlike with black-box APIs, users can understand system behavior and optimize for specific use cases.

The model suits backup deployment scenarios. When primary services fail on particular prompts or self-hosting requirements exist, HunyuanVideo 1.5 provides a capable alternative.

For cost-sensitive projects with moderate quality requirements, the efficiency gains justify adoption. For cutting-edge visual fidelity, closed-source leaders still maintain advantages.

Sources:

- GitHub Repository: https://github.com/Tencent-Hunyuan/HunyuanVideo-1.5

- Hugging Face Model Card: https://huggingface.co/tencent/HunyuanVideo-1.5

- Tencent Official Website: https://hunyuan.tencent.com/video/en