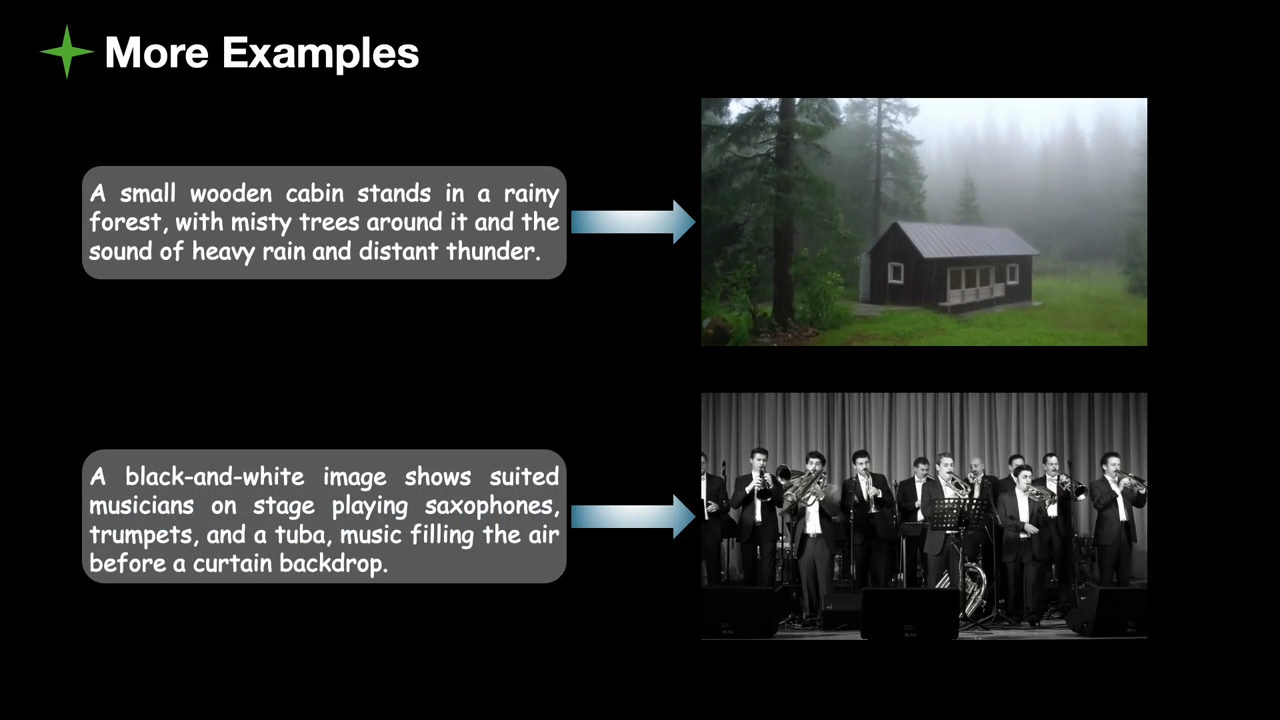

AnyTalker: Scalable Multi Person Talking Video Generation With Identity Aware Attention

Hong Kong University of Science and Technology (HKUST) released AnyTalker on November 28, 2025. The audio-driven framework generates multi-person talking videos and scales to additional identities through an identity-aware attention mechanism. The technical report was published on arXiv on December 1, 2025.

Core Innovation

AnyTalker addresses two fundamental challenges in multi-person video generation: the high cost of collecting diverse multi-person data and the difficulty of driving multiple identities with coherent interactivity.

The framework features a flexible multi-stream processing architecture that extends the Diffusion Transformer's attention block with an identity-aware attention mechanism. The mechanism processes identity-audio pairs iteratively, so the number of drivable identities can scale arbitrarily.

Two-person conversation with synchronized lip movements | HKUST AnyTalker

Interactive dialogue maintaining identity consistency | HKUST AnyTalker

Key specifications:

- 1.3 billion parameter model released (14 billion model coming to Video Rebirth platform)

- 480p inference on single GPU

- 24 FPS output

- Automatic switching between single person and multi person modes

- Apache 2.0 License for models

Training Pipeline Innovation

The training pipeline depends solely on single person videos to learn multi person speaking patterns, then refines interactivity with only a few real multi person clips.

This approach reduces data collection costs compared to methods requiring extensive multi person datasets. The system learns fundamental speaking patterns from abundant single person video data, then applies minimal multi person data for interaction refinement.

Singing performance with audio lip synchronization | HKUST AnyTalker

Three person interaction demonstrating scalability | HKUST AnyTalker

Training approach:

- Stage 1: Train on single person videos exclusively

- Stage 2: Finetune with minimal multi person clips

- Result: Multi person generation capability without massive multi person datasets

Identity Aware Attention Mechanism

The novel attention mechanism processes identity audio pairs iteratively. Each iteration handles one character's identity and audio input, updating the shared representation.

This architecture enables arbitrary scaling of identities. The system handles two person conversations, three person interactions, or larger groups without architectural changes. The same mechanism processes each identity audio pair sequentially.

Traditional multi person generation methods struggle with identity binding. Audio tracks associate incorrectly with characters, causing mismatched lip movements. AnyTalker's identity aware attention resolves this through explicit identity audio pairing during processing.
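
The iterative pairing described above can be illustrated with a toy example. This is a minimal sketch, not the released implementation: it assumes single-head dot-product attention with no learned projections, and the names `cross_attend` and `identity_aware_attention` are hypothetical. It only shows how a loop over identity-audio pairs lets the same block serve any number of speakers.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries, context):
    """Toy single-head attention: context tokens act as both keys and values."""
    dim = len(queries[0])
    attended = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in context]
        weights = softmax(scores)
        attended.append(
            [sum(w * k[d] for w, k in zip(weights, context)) for d in range(dim)]
        )
    return attended


def identity_aware_attention(video_tokens, id_audio_pairs):
    """Fold each (identity_tokens, audio_tokens) pair into the shared tokens.

    Assumption: one residual cross-attention update per pair. The real
    block's projections, masking, and normalization are not shown.
    """
    hidden = [list(token) for token in video_tokens]
    for identity_tokens, audio_tokens in id_audio_pairs:
        context = identity_tokens + audio_tokens
        if not context:
            continue  # nothing to attend to for this pair
        update = cross_attend(hidden, context)
        hidden = [[h + u for h, u in zip(ht, ut)] for ht, ut in zip(hidden, update)]
    return hidden


# Two speakers, then three: the same function handles both.
video = [[1.0, 0.0], [0.0, 1.0]]
speaker = ([[0.5, 0.5]], [[0.1, 0.9]])
two = identity_aware_attention(video, [speaker, speaker])
three = identity_aware_attention(video, [speaker, speaker, speaker])
```

Because the loop body is identical for every pair, adding a speaker changes only the length of the input list, which is the scaling property the section describes.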

Complex dialogue scene maintaining natural interactivity | HKUST AnyTalker

Model Architecture

AnyTalker builds on Diffusion Transformer (DiT) architecture with significant extensions:

Base architecture:

- Wan2.1-Fun-V1.1-1.3B-InP foundation model

- Wav2vec2-base-960h for audio processing

- Diffusion-based generation pipeline

Novel components:

- Identity-aware attention blocks replacing standard attention

- Multi-stream processing for parallel identity handling

- Iterative identity-audio pair processing

Audio processing:

- Wav2vec2 extracts audio features from input tracks

- Features encode speech content, timing, intonation

- Guide scale parameter: 4.5 (applied to both text and audio)
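
To make the guide scale concrete, here is a minimal sketch of how a guidance scale is typically applied in diffusion sampling. It assumes the standard classifier-free guidance formula with a single scale shared by the text and audio conditions; the article does not spell out the exact formula AnyTalker uses, and `apply_guidance` is a hypothetical name.

```python
def apply_guidance(uncond, cond, scale=4.5):
    """Classifier-free guidance on two noise predictions.

    uncond: prediction with text and audio conditioning dropped
    cond:   prediction with both text and audio conditioning
    scale:  guidance scale (4.5 is the value recommended in the article)
    """
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# With scale 1.0 the result is just the conditional prediction.
print(apply_guidance([0.0, 2.0], [2.0, 2.0], scale=1.0))
# Larger scales push further along the direction (cond - uncond).
print(apply_guidance([0.0, 2.0], [2.0, 2.0]))
```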

Performance Characteristics

The reported experiments show strong lip synchronization, visual quality, and natural interactivity. The system strikes a favorable balance between data cost and generation fidelity.

Lip synchronization: Audio visual alignment maintains precision across all characters in multi person scenes. Each character's mouth movements match their corresponding audio track accurately.

Visual quality: Generated videos maintain consistent character appearance, lighting, and scene coherence throughout sequences, without visible flickering or identity drift in the published demonstrations.

Natural interactivity: Characters exhibit appropriate non verbal responses during conversations. Turn taking, listening behaviors, and conversational flow appear natural.

Evaluation Methodology

The research contributes a targeted metric and dataset designed to evaluate the naturalness and interactivity of generated multi-person videos.

Interactivity benchmark:

- Custom dataset for multi person evaluation

- Interactivity score measuring conversational naturalness

- Speaker duration tracking for turn taking analysis

- Reference frames for identity consistency verification

The benchmark addresses a gap in multi-person video evaluation. Previous metrics focused on single-person quality or basic lip sync. The new benchmark specifically assesses the quality of multi-character interaction.

Technical Requirements

System requirements:

- Python 3.10

- PyTorch 2.6.0 with CUDA 12.6

- Flash Attention 2.8.1

- FFmpeg with libx264 support

- Single GPU for 480p inference

Model downloads:

- Wan2.1-Fun-V1.1-1.3B-InP (base model)

- Wav2vec2-base-960h (audio encoder)

- AnyTalker-1.3B (specialized weights)

The models are available on Hugging Face, and the documentation provides CLI download commands.
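
As an alternative to the CLI, the three downloads can be scripted. The sketch below uses `snapshot_download` from the third-party `huggingface_hub` package with the repository IDs listed under Sources. The mapping of each repository to a folder under `checkpoints/` is an assumption based on the directory layout shown later in this article, and `download_all` is a hypothetical helper name.

```python
from pathlib import Path

# Hugging Face repo ID -> assumed subfolder under checkpoints/
CHECKPOINTS = {
    "alibaba-pai/Wan2.1-Fun-V1.1-1.3B-InP": "Wan2.1-Fun-V1.1-1.3B-InP",
    "facebook/wav2vec2-base-960h": "wav2vec2-base-960h",
    "zzz66/AnyTalker-1.3B": "AnyTalker",
}


def download_all(root="checkpoints"):
    """Download every checkpoint into root/<subfolder>."""
    # Imported lazily so the mapping can be inspected without the package.
    from huggingface_hub import snapshot_download

    for repo_id, subfolder in CHECKPOINTS.items():
        snapshot_download(repo_id=repo_id, local_dir=str(Path(root) / subfolder))


if __name__ == "__main__":
    download_all()
```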

Inference Configuration

The system automatically switches between single person and multi person generation modes based on input audio list length.
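
The switching rule can be pictured as a check on the number of audio tracks. This is a sketch of the idea only: the function name `select_mode` and the returned labels are hypothetical, and the released code may branch differently.

```python
def select_mode(audio_paths):
    """Pick a generation mode from the number of audio tracks (assumed rule)."""
    if not audio_paths:
        raise ValueError("at least one audio track is required")
    return "single" if len(audio_paths) == 1 else "multi"


print(select_mode(["host.wav"]))
print(select_mode(["host.wav", "guest.wav"]))
```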

Key parameters:

offload_model: Offloads model to CPU after forward passes, reducing GPU memory requirements. Enables inference on lower memory GPUs.

det_thresh: Detection threshold for InsightFace model (default 0.15). Lower values improve performance on abstract style images or challenging face detection scenarios.

sample_guide_scale: Guidance scale for generation (recommended 4.5). Applied to both text prompts and audio inputs. Higher values increase adherence to conditioning.

mode: Audio padding strategy. "pad" assumes zero padded common length. "concat" chains speaker clips then zero pads non speaker segments.

use_half: Enables FP16 half-precision inference, which speeds up generation with minimal quality impact.
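
The two `mode` values are easier to see on toy data. The sketch below is one interpretation of the description above, not the repository's code: "pad" right-pads every track with zeros to the longest length, and "concat" lays the clips end to end so that each speaker's track is silent outside their own segment. `align_tracks` is a hypothetical helper operating on plain lists of samples.

```python
def align_tracks(clips, mode="pad"):
    """Return one equal-length track per speaker.

    clips: list of per-speaker sample lists
    mode:  "pad" or "concat" (interpretation of the article's description)
    """
    if mode == "pad":
        # All speakers share a timeline that starts at zero.
        target = max(len(clip) for clip in clips)
        return [list(clip) + [0.0] * (target - len(clip)) for clip in clips]
    if mode == "concat":
        # Speakers talk one after another; everyone else is zero-padded.
        total = sum(len(clip) for clip in clips)
        tracks, offset = [], 0
        for clip in clips:
            tail = total - offset - len(clip)
            tracks.append([0.0] * offset + list(clip) + [0.0] * tail)
            offset += len(clip)
        return tracks
    raise ValueError(f"unknown mode: {mode!r}")


clips = [[1.0, 2.0], [3.0]]
print(align_tracks(clips, "pad"))     # [[1.0, 2.0], [3.0, 0.0]]
print(align_tracks(clips, "concat"))  # [[1.0, 2.0, 0.0], [0.0, 0.0, 3.0]]
```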

Licensing and Commercial Use

The Apache 2.0 License covers all models in the repository. This permissive open-source license allows:

- Commercial use, subject to the license's attribution and notice conditions

- Modification and distribution

- Private use

- Patent grant from contributors

User responsibilities: The license grants freedom to use generated content while requiring compliance with its provisions. Users remain fully accountable for their usage, which must not:

- Share content violating applicable laws

- Cause harm to individuals or groups

- Disseminate personal information intended for harm

- Spread misinformation

- Target vulnerable populations

No rights claimed: HKUST claims no rights over user generated content. Complete ownership and responsibility rest with users.

Code and Model Availability

Released components:

- Complete inference code (November 28, 2025)

- 1.3B parameter model weights (stage 1, trained on single person data)

- Interactivity benchmark dataset and evaluation script

- Technical report on arXiv (December 1, 2025)

- Project page with demonstrations

Coming soon:

- 14B parameter model (planned for Video Rebirth creation platform)

- Additional documentation and examples

Repository structure:

checkpoints/

├── Wan2.1-Fun-V1.1-1.3B-InP

├── wav2vec2-base-960h

└── AnyTalker
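
Before running inference, it can help to confirm that this layout is in place. The following is a small sketch using only the standard library. The folder names come from the tree above, while `missing_checkpoints` is a hypothetical helper and not part of the repository.

```python
from pathlib import Path

# Folder names taken from the checkpoint tree in the article.
EXPECTED = ("Wan2.1-Fun-V1.1-1.3B-InP", "wav2vec2-base-960h", "AnyTalker")


def missing_checkpoints(root="checkpoints"):
    """Return the expected checkpoint folders that are absent under root."""
    root = Path(root)
    return [name for name in EXPECTED if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoint folders:", ", ".join(missing))
    else:
        print("Checkpoint layout looks complete.")
```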

All code is available on GitHub at HKUST-C4G/AnyTalker. The models can be downloaded from Hugging Face.

Use Cases and Applications

Content creation:

- Animated conversations for educational content

- Character dialogues for entertainment

- Multi-person interview simulations

- Podcast video visualization

Media production:

- Budget-friendly animation for indie creators

- Rapid prototyping of conversational scenes

- Voice acting visualization

- Multilingual content adaptation

Research applications:

- Multi-person interaction studies

- Audio-visual synchronization research

- Identity-aware generation techniques

- Conversational AI visualization

Accessibility:

- Visual representation for audio content

- Sign language support enhancement

- Educational material animation

- Communication aid development

Comparison With Related Work

AnyTalker differs from other multi-person generation frameworks in its training efficiency and scalability.

MultiTalk (a separate project from a Meituan/HKUST collaboration) addresses similar challenges but requires a different training approach. AnyTalker's single-person to multi-person pipeline offers efficiency advantages.

Traditional talking head methods focus on high-quality single-person animation. Extending these to multi-person scenarios typically requires complete retraining with multi-person datasets. AnyTalker's architecture natively handles multiple identities. For single-portrait workflows that prioritize long-form continuous output (dubbed segments, hosted explainers, or localized content), InfiniteTalk generates extended talking avatar sequences with full body and facial animation driven by audio.

Identity binding solutions: Previous methods struggle to associate each audio track with the correct person in multi-stream inputs. AnyTalker's identity-aware attention mechanism resolves this by pairing each identity with its audio explicitly during processing.

Technical Limitations

Resolution constraints: Current release supports 480p inference. While sufficient for many applications, higher resolution generation requires more computational resources.

Single GPU inference: The 1.3B model runs on a single GPU. Larger scenes or higher resolutions may benefit from multi-GPU support, which is not yet implemented in the released code.

Audio preprocessing: The system expects audio tracks in a specific format. Tracks must be zero-padded or concatenated so that multiple speakers stay synchronized.

Face detection dependency: InsightFace model detects faces in reference images. Abstract or stylized imagery may require threshold adjustment. Extreme stylization might fail detection.

Interaction complexity: While interactivity metrics show strong performance, extremely complex multi party interactions with rapid turn taking may exhibit artifacts.

Research Team

Development led by researchers from:

Hong Kong University of Science and Technology:

- Division of Applied Mathematics and Computation

- Department of Electronic and Computer Engineering

Key contributors:

- Zhizhou Zhong

- Yicheng Ji

- Zhe Kong

- Yiying Liu (Project Leader)

- Wenhan Luo (Corresponding Author)

- Additional contributors from collaborative institutions

The research represents collaboration between academic institutions advancing audio-driven video generation technology.

Future Development

14B model: Larger model variant promises improved quality and capability. Planned integration with Video Rebirth creation platform will make it accessible for commercial applications.

Enhanced features: Future updates may address current limitations in resolution, multi GPU support, and interaction complexity handling.

Community contributions: The open-source release under Apache 2.0 enables community extensions, optimizations, and integration with other tools.

Dataset expansion: Benchmark for interactivity evaluation may expand with community contributions, improving evaluation standards for multi person generation.

Practical Implications

AnyTalker's training efficiency opens multi person video generation to smaller research groups and independent creators. Previous approaches requiring massive multi person datasets limited access to well funded organizations.

The arbitrary identity scaling capability future proofs applications. Systems built on AnyTalker handle two person dialogues or large group conversations without architectural modifications.

Apache 2.0 licensing removes commercial barriers. Developers integrate the technology into products without licensing negotiations or usage restrictions beyond general ethical requirements.

Getting Started

Installation steps:

- Create conda environment (Python 3.10)

- Install PyTorch 2.6.0 with CUDA 12.6 support

- Install requirements and dependencies

- Install Flash Attention 2.8.1

- Configure FFmpeg with libx264 support
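
A quick preflight check can catch two of the requirements above before a long install. This sketch uses only the standard library and the real `ffmpeg -encoders` command. The function names are hypothetical, and the check does not cover PyTorch, CUDA, or Flash Attention.

```python
import shutil
import subprocess
import sys


def has_libx264(encoders_text):
    """True if the output of `ffmpeg -encoders` lists the libx264 encoder."""
    return any("libx264" in line.split() for line in encoders_text.splitlines())


def check_environment():
    """Return a list of human-readable problems (empty if none were found)."""
    problems = []
    if sys.version_info[:2] != (3, 10):
        found = f"{sys.version_info.major}.{sys.version_info.minor}"
        problems.append(f"Python 3.10 expected, found {found}")
    ffmpeg = shutil.which("ffmpeg")
    if ffmpeg is None:
        problems.append("ffmpeg not found on PATH")
    else:
        result = subprocess.run(
            [ffmpeg, "-hide_banner", "-encoders"], capture_output=True, text=True
        )
        if not has_libx264(result.stdout):
            problems.append("ffmpeg build lacks libx264")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```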

Model setup:

- Download base model (Wan2.1-Fun-V1.1-1.3B-InP)

- Download audio encoder (Wav2vec2-base-960h)

- Download AnyTalker weights (1.3B checkpoint)

- Organize checkpoint directory structure

Running inference:

- Prepare reference images for characters

- Prepare audio tracks (one per character)

- Configure input JSON with audio-image pairs

- Execute inference script with desired parameters

- Output videos generated in specified directory
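
The input file pairs each reference image with its audio track. The sketch below shows one way such a file could be assembled. Every key name here (`prompt`, `speakers`, `image`, `audio`) and the file names are hypothetical placeholders, so check the example configurations in the GitHub repository for the actual schema.

```python
import json


def build_job(pairs, prompt=""):
    """Build an input description from (image_path, audio_path) pairs.

    The key names are illustrative only, not the repository's schema.
    """
    return {
        "prompt": prompt,
        "speakers": [{"image": image, "audio": audio} for image, audio in pairs],
    }


job = build_job(
    [("alice.png", "alice.wav"), ("bob.png", "bob.wav")],
    prompt="two people talking in a studio",
)

# Write the job to disk in the same form it would be read back.
with open("input_example.json", "w", encoding="utf-8") as handle:
    json.dump(job, handle, indent=2)
```

One entry in the list corresponds to single-person mode. Additional entries add speakers without changing any other part of the file.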

Complete documentation, including example configurations, is available in the GitHub repository.

Sources:

- AnyTalker Project Page: https://hkust-c4g.github.io/AnyTalker-homepage/

- GitHub Repository: https://github.com/HKUST-C4G/AnyTalker

- Technical Report (arXiv): https://arxiv.org/abs/2511.23475

- Hugging Face Models: https://huggingface.co/zzz66/AnyTalker-1.3B

- Base Model: https://huggingface.co/alibaba-pai/Wan2.1-Fun-V1.1-1.3B-InP

- Audio Encoder: https://huggingface.co/facebook/wav2vec2-base-960h