DiT360: AI Generated 360 Degree Panoramic Images

360 Panoramic View | Image from DiT360 Research

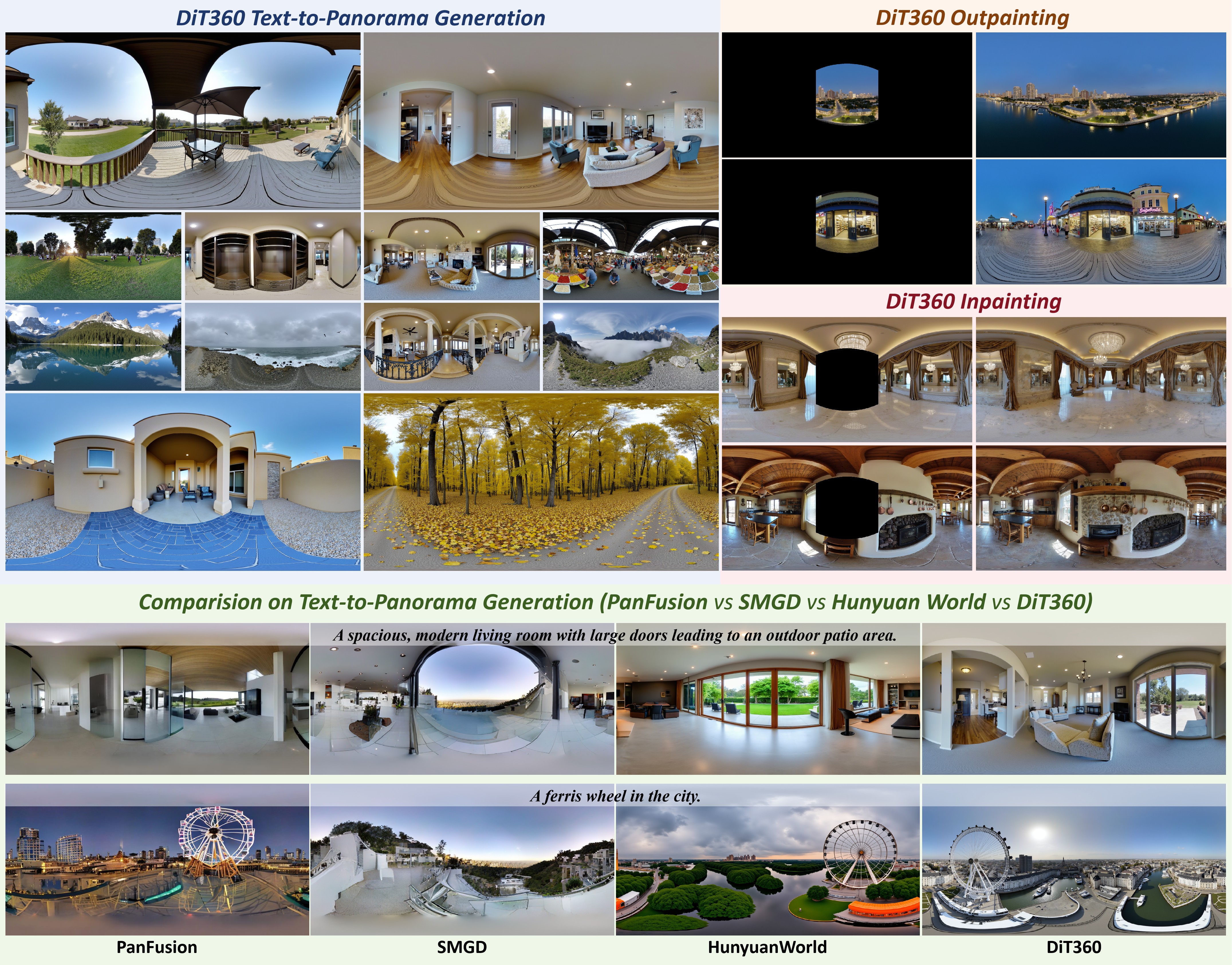

Insta360's research team released DiT360, an open source system for generating high quality 360 degree panoramic images from text descriptions. The project addresses a specific technical challenge that makes panorama generation different from standard image creation: maintaining seamless connections where the left and right edges meet.

Regular diffusion models produce images with distinct borders. That works fine for standard photos but creates visible seams in 360 degree panoramas where the rightmost and leftmost edges need to connect perfectly. DiT360 solves this through techniques designed specifically for spherical image formats.

The code, pretrained models, and dataset are all available now. Filmmakers and content creators can use the system to generate panoramic backgrounds, location concepts, or immersive environments without expensive 360 degree camera shoots.

DiT360 generated indoor panoramic scenes

The Technical Challenge: Seamless 360 Degree Continuity

A 360 degree panoramic image captures the full 360 degrees of the horizontal field of view and, in its spherical form, the full 180 degrees vertically as well. Stored as an equirectangular projection, the standard format for spherical images, the panorama is a rectangular image, typically twice as wide as it is tall, whose left edge must connect seamlessly with its right edge.
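To make the wraparound property concrete, here is a minimal Python sketch of the equirectangular mapping from pixel coordinates to directions on the sphere. The axis convention, the function names, and the 2048 by 1024 image size are illustrative assumptions, not taken from the DiT360 code.

```python
import math

def pixel_to_lonlat(x, y, width, height):
    """Map a pixel center to (longitude, latitude) in radians.

    Assumed convention: longitude runs from -pi at the left edge to +pi at
    the right edge; latitude runs from +pi/2 at the top to -pi/2 at the bottom.
    """
    lon = ((x + 0.5) / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - ((y + 0.5) / height) * math.pi
    return lon, lat

def lonlat_to_direction(lon, lat):
    """Convert longitude/latitude to a unit direction vector (x, y, z)."""
    cos_lat = math.cos(lat)
    return (cos_lat * math.sin(lon), math.sin(lat), cos_lat * math.cos(lon))

def angular_distance(a, b):
    """Angle in radians between two unit vectors."""
    dot = sum(p * q for p, q in zip(a, b))
    return math.acos(max(-1.0, min(1.0, dot)))

# Illustrative 2:1 panorama size (an assumption for this example).
width, height = 2048, 1024
row = height // 2

left = lonlat_to_direction(*pixel_to_lonlat(0, row, width, height))
right = lonlat_to_direction(*pixel_to_lonlat(width - 1, row, width, height))
neighbor = lonlat_to_direction(*pixel_to_lonlat(1, row, width, height))

# Distance across the wrap seam versus distance between two interior neighbors.
seam_gap = angular_distance(left, right)
interior_gap = angular_distance(left, neighbor)
```

Under this convention the first and last pixels of a row are as close on the sphere as any two neighboring pixels, which is why a visible jump between them reads as a seam in a 360 degree viewer.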

Standard image generation models don't account for this wraparound property. They treat images as having distinct borders. Generate a panorama with a regular model and you'll likely see a visible discontinuity where left meets right: objects cut in half, lighting mismatches, or perspective problems.

The challenge gets more complex because the model needs to maintain this continuity throughout the entire generation process, not just in the final output. The diffusion process iteratively refines the image through multiple steps. At each step, the wraparound constraint must hold.
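One rough way to quantify such a discontinuity is to compare the jump across the wrap boundary with the typical jump between adjacent interior columns. The sketch below is a hypothetical diagnostic, not a metric from the DiT360 project; it works on a grayscale image stored as a list of rows, and the `seam_ratio` name is made up for illustration.

```python
import math

def column(image, x):
    """Return column x of a grayscale image stored as a list of rows."""
    return [row[x] for row in image]

def mean_abs_diff(col_a, col_b):
    """Mean absolute difference between two columns of equal height."""
    return sum(abs(a - b) for a, b in zip(col_a, col_b)) / len(col_a)

def seam_ratio(image):
    """Jump across the wrap seam divided by the typical interior column jump.

    Values at or below roughly 1 mean the seam is no more abrupt than an
    ordinary column boundary; large values indicate a visible discontinuity.
    """
    width = len(image[0])
    seam = mean_abs_diff(column(image, width - 1), column(image, 0))
    interior = [
        mean_abs_diff(column(image, x), column(image, x + 1))
        for x in range(width - 1)
    ]
    typical = sum(interior) / len(interior)
    if typical == 0:
        return 0.0 if seam == 0 else float("inf")
    return seam / typical

# Two synthetic test images: one that wraps cleanly, one that does not.
width, height = 64, 4
periodic = [
    [0.5 + 0.5 * math.cos(2 * math.pi * x / width) for x in range(width)]
    for _ in range(height)
]
ramp = [[x / (width - 1) for x in range(width)] for _ in range(height)]

periodic_score = seam_ratio(periodic)  # brightness wraps around smoothly
ramp_score = seam_ratio(ramp)          # dark left edge meets bright right edge
```

The periodic image scores below 1, while the ramp scores far higher because its right edge is much brighter than its left edge. A check like this only inspects the final image; it says nothing about whether the constraint held during the intermediate diffusion steps.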

How DiT360 Works

DiT360 builds on diffusion transformer architectures, applying modifications that account for spherical geometry. The system uses several technical strategies to maintain seamless wraparound:

Circular padding during generation. The model treats the image as having periodic boundary conditions in the horizontal direction. Information from the right edge influences generation on the left edge and vice versa throughout the process.

Spherical position encoding. Standard position encodings assume Euclidean geometry. DiT360 uses encodings appropriate for spherical surfaces, helping the model understand spatial relationships on a sphere rather than a flat plane.

Training on 360 degree data. The model trains specifically on equirectangular panoramic images, learning the statistical patterns and geometric properties inherent to this format.

Seamless stitching during VAE decoding. The variational autoencoder that decodes latent representations to pixel images applies blending techniques at the wraparound boundary to ensure smooth transitions.

These modifications work together to produce panoramas where the left and right edges connect naturally without visible seams, lighting discontinuities, or geometric distortions.
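The circular padding idea can be illustrated in a few lines of Python. This is a generic sketch of periodic boundary handling on a single row of values, not DiT360's implementation, which would operate on latent features inside the model rather than on raw pixel rows. All function names here are illustrative. With NumPy, the same padding is available as `np.pad(..., mode="wrap")`.

```python
def circular_pad_row(row, pad):
    """Pad a row on both sides with values wrapped from the opposite edge."""
    if pad < 0 or pad > len(row):
        raise ValueError("pad must be between 0 and the row length")
    if pad == 0:
        return list(row)
    return row[-pad:] + row + row[:pad]

def blur_row_circular(row, kernel):
    """Apply an odd-length 1D kernel using circular padding.

    The output has the same length as the input, and values near one edge
    are computed from neighbors on the opposite edge.
    """
    pad = len(kernel) // 2
    padded = circular_pad_row(row, pad)
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(row))
    ]

def roll(row, shift):
    """Rotate a row to the right by shift positions, wrapping around."""
    shift %= len(row)
    return row[-shift:] + row[:-shift]

row = [3, 1, 4, 1, 5, 9, 2, 6]
kernel = [1, 2, 1]

# With circular padding, filtering a rotated row gives the rotated result of
# filtering the original row: no column is treated as a special border.
equivariant = all(
    blur_row_circular(roll(row, s), kernel) == roll(blur_row_circular(row, kernel), s)
    for s in range(len(row))
)
```

The `equivariant` check is the useful property here: rotating the panorama horizontally and then filtering gives the same result as filtering and then rotating, so the operation has no preferred place to put a seam. Zero padding or edge replication would break that property at the left and right borders.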

What You Can Generate

The system handles diverse scene types. The examples on the project page show indoor spaces, outdoor landscapes, architectural environments, and natural settings.

DiT360 generated outdoor panoramic landscapes

Indoor scenes demonstrate the model's ability to handle complex layouts with furniture, walls at different angles, windows, and varied lighting. The spatial coherence remains consistent even as the view wraps around the full 360 degrees.

Outdoor scenes show natural environments, urban landscapes, and architectural exteriors. The model maintains horizon alignment, perspective consistency, and natural lighting across the entire panoramic view.

The quality is high enough for practical use in virtual production, game development, architectural visualization, and VR content creation. Resolution and detail levels support these professional applications.

Open Source and Accessible

Everything needed to use or build on DiT360 is publicly available:

Code repository. The GitHub repository contains the full implementation, training scripts, and inference code. Developers can run the model locally, modify it for specific needs, or integrate it into larger pipelines.

Pretrained models. Hugging Face hosts the trained model weights. You can download and use them immediately without training from scratch. This matters because training high quality panorama models requires substantial computational resources and large datasets.

Demo interface. A Hugging Face Space provides a web interface for trying the system. Enter a text prompt, adjust parameters like seed and inference steps, and generate panoramas through your browser without local setup.

Dataset. The training data used to develop DiT360 is also available. Researchers can analyze what the model learned from, use the data for other projects, or expand it with additional panoramic images.

This complete open source release enables both practical use and further research. Filmmakers can generate content immediately. Researchers can improve the techniques or adapt them to related problems.

Practical Applications for Filmmakers

DiT360 enables several workflow improvements for film and video production:

Virtual production backgrounds. Generate panoramic environments for LED volume stages or green screen replacement. The 360 degree coverage means the background remains consistent regardless of camera position or movement.

Location scouting and concept visualization. Create panoramic concepts of potential filming locations before committing to physical scouts. Test different environmental options quickly during pre-production.

VR and immersive content. Produce backgrounds and environments for virtual reality experiences. The seamless 360 degree format is native to VR display requirements.

Architectural and interior visualization. Generate panoramic views of spaces for set design approval, location references, or establishing shots in animations.

Game development environments. Create skyboxes and environment maps for 3D games and interactive experiences. The panoramic format is standard for these applications.

Training data generation. Produce synthetic panoramic images to augment datasets for computer vision research, particularly for tasks involving 360 degree cameras or spherical projections.

The quality and seamlessness of DiT360 outputs make them suitable for these professional uses rather than just experimental demonstrations.

Technical Details for Developers

For those implementing DiT360 or building on the research, several technical aspects matter:

Architecture foundation. The system builds on diffusion transformer models, which have shown strong performance in image generation tasks. The modifications for 360 degree generation integrate into this proven architecture rather than requiring a completely novel design.

Equirectangular format. DiT360 generates images in equirectangular projection, the standard format for representing spherical images as 2D rectangles. This format is widely supported by 360 degree viewers, VR headsets, and panoramic image processing software.

Resolution capabilities. The pretrained model generates at resolutions suitable for professional applications. Higher resolutions are possible with additional computational resources.

Inference parameters. Users can control the generation process through parameters such as a random seed for reproducibility, the number of inference steps, which trades speed for quality, and a guidance scale that affects prompt adherence.

Computational requirements. Running the model requires GPU hardware, with memory requirements depending on desired resolution. The Hugging Face demo runs on cloud infrastructure, making the capability accessible without local GPU setup.

Integration options. The code structure allows integration into larger pipelines. Generate panoramas programmatically, batch process multiple prompts, or combine with other tools for panoramic video generation or 360 degree scene composition.
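To show what the seed and guidance scale mentioned above typically control in a diffusion pipeline, here is a small stdlib-only Python sketch. It uses the standard classifier-free guidance formula found in many diffusion systems; whether DiT360 applies guidance in exactly this form is an assumption, and both function names are hypothetical rather than part of the project's API.

```python
import random

def initial_noise(seed, size):
    """Draw the starting noise from a seeded generator.

    The same seed yields the same noise, which is what makes a generation
    reproducible when the other settings are unchanged.
    """
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

def apply_guidance(uncond, cond, guidance_scale):
    """Classifier-free guidance: push the prediction toward the prompt.

    uncond and cond stand in for the model's predictions without and with
    the text prompt. A scale of 1.0 returns the conditional prediction
    unchanged; larger values exaggerate the difference between the two.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]

# Same seed gives the same starting point, a different seed does not.
noise_a = initial_noise(42, 8)
noise_b = initial_noise(42, 8)
noise_c = initial_noise(43, 8)

# Toy predictions (made-up numbers) to show the effect of the scale.
uncond = [0.0, 0.0]
cond = [1.0, 2.0]
guided = apply_guidance(uncond, cond, 3.0)
```

In a real pipeline each denoising step produces such predictions, so more inference steps mean more model evaluations, which is the speed versus quality tradeoff described above.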

How DiT360 Compares to Other Approaches

Several methods exist for creating 360 degree panoramic content, each with different tradeoffs:

Traditional 360 degree cameras. Physical cameras like Insta360 products capture real panoramic images through multiple lenses and stitching. This provides photorealistic results but requires being in the actual location and dealing with camera visibility in the final image.

Panoramic photography stitching. Taking overlapping photos and stitching them into panoramas works well but requires careful shooting technique and post processing. Lighting changes between shots can create visible seams.

3D rendering engines. Game engines and 3D software can render panoramic images from virtual scenes. This offers complete control but requires building the entire 3D environment first.

Other AI panorama methods. Previous AI approaches to panorama generation often struggled with seamless wraparound or produced lower quality results. DiT360's focus on diffusion transformers with spherical geometry awareness produces notably better seamlessness.

DiT360 offers a middle ground: generate high quality panoramic environments from text descriptions without physical presence, camera equipment, 3D modeling skills, or problematic seams. The tradeoff is less control over exact details compared to photography or 3D rendering.

Current Limitations and Future Directions

Like all AI generation systems, DiT360 has limitations worth understanding:

Text prompt interpretation. The system's ability to generate exactly what you describe depends on how clearly the concept exists in its training data. Unusual combinations or very specific details may not render as intended.

Fine detail control. While overall scene composition and style respond to prompts, controlling specific small details (exact object placement, precise architectural features) remains challenging.

Consistency across multiple generations. Generating multiple related panoramas with consistent style and elements requires careful prompting and possibly post processing.

Resolution ceiling. While adequate for many applications, the resolution has practical limits imposed by memory constraints and model architecture.

Static images only. DiT360 generates still panoramic images, not panoramic video. Creating 360 degree video requires additional techniques.

Future development might address these through larger models, better training data, conditioning mechanisms for finer control, or extensions to temporal generation for panoramic video.

Getting Started with DiT360

Using DiT360 requires choosing an approach based on your technical comfort and needs:

Browser demo. The Hugging Face Space at https://huggingface.co/spaces/Insta360-Research/DiT360 provides immediate access. Enter a text prompt describing your desired scene, adjust optional parameters, and generate. This works for testing, one-off generations, or users without local GPU hardware.

Local installation. The GitHub repository at https://github.com/Insta360-Research-Team/DiT360 contains installation instructions. Clone the repo, install dependencies, download pretrained weights from Hugging Face, and run inference locally. This gives you more control, privacy for sensitive projects, and the ability to modify the code.

Model integration. Developers building larger systems can import DiT360 as a component. The Hugging Face model hub provides API access. Generate panoramas programmatically as part of automated pipelines.

Dataset exploration. Researchers interested in panoramic image properties can access the training dataset. Analyze what makes effective 360 degree content or use it to train related models.

Documentation on the project page and in the repository covers setup steps, parameter descriptions, and example usage.

The Broader Context: AI and Panoramic Content

DiT360 represents progress in a specific but important area of AI content generation. Panoramic and spherical formats matter for several growing application domains:

Virtual reality. VR experiences fundamentally require 360 degree content. AI generation of panoramic environments accelerates VR content creation.

Immersive media. As platforms and devices support more immersive viewing, demand for 360 degree content grows. Tools that generate this content efficiently become increasingly valuable.

Architectural visualization. Presenting spaces through 360 degree views offers clients a more complete sense of environments compared to standard perspective images.

Autonomous systems. Self-driving vehicles and robots often use 360 degree camera systems. Synthetic panoramic data generation aids in training perception systems.

Gaming and metaverse. Virtual worlds require vast amounts of environmental content. AI generation helps populate these spaces more efficiently than manual 3D modeling.

DiT360's open source release accelerates development across these domains by providing a capable tool and establishing technical approaches others can build on.

Key Takeaways About DiT360

Insta360 Research released DiT360 as a complete open source project for generating 360 degree panoramic images from text. The system addresses the specific technical challenge of maintaining seamless wraparound where left and right edges meet.

The release includes code on GitHub, pretrained models on Hugging Face, a browser demo for immediate testing, and the training dataset. Everything needed to use or extend the system is publicly available.

Applications span virtual production backgrounds, VR content creation, architectural visualization, game development, and location concept generation. The quality supports professional use rather than just experimentation.

The technical approach combines diffusion transformers with modifications for spherical geometry, including circular padding, spherical position encoding, and seamless VAE decoding.

Limitations include text prompt interpretation challenges, limited fine detail control, and static image generation rather than video. Future work might address these through larger models and better conditioning mechanisms.

The project represents useful progress in AI panoramic content generation and demonstrates the value of complete open source releases including code, models, and data.