LTX-2: The Open Source 4K AI Video Model That Changes Everything

LTX-2 demonstration showing native 4K video generation with synchronized audio. Source: Lightricks

Lightricks just redefined what open source means for AI video generation. On January 6, 2026, the company released LTX-2, the first production ready AI video model combining native 4K output, synchronized audio, and truly open weights with full training code. This isn't another proprietary model with API access only. This is complete source code, model weights, and inference tooling that runs on consumer GPUs you already own.

The release matters because it eliminates the three barriers that previously separated professional filmmakers from AI video tools: quality ceiling, licensing uncertainty, and hardware requirements. LTX-2 generates native 4K at 50fps with synchronized audio in clips of up to 10 seconds, runs locally on a single NVIDIA RTX 4090, and carries permissive licensing that is free for commercial use by companies under $10 million in annual recurring revenue.

For independent filmmakers, this represents the moment AI video generation becomes a practical production tool rather than an expensive experiment. The technology that required cloud computing budgets and proprietary licensing now runs on workstation hardware with source code you can customize.

Try LTX-2 text-to-video generation in AI FILMS Studio to experience native 4K generation firsthand.

New: Want to run LTX-2 locally on your RTX GPU? Check out our complete installation guide covering ComfyUI setup, 4K workflows, and optimization tips following Nvidia's CES 2026 updates.

Don't have the hardware or prefer not to install? Use LTX-2 instantly on AI FILMS Studio with no installation required. Generate 4K videos directly in your browser with cloud-based GPUs and zero setup.

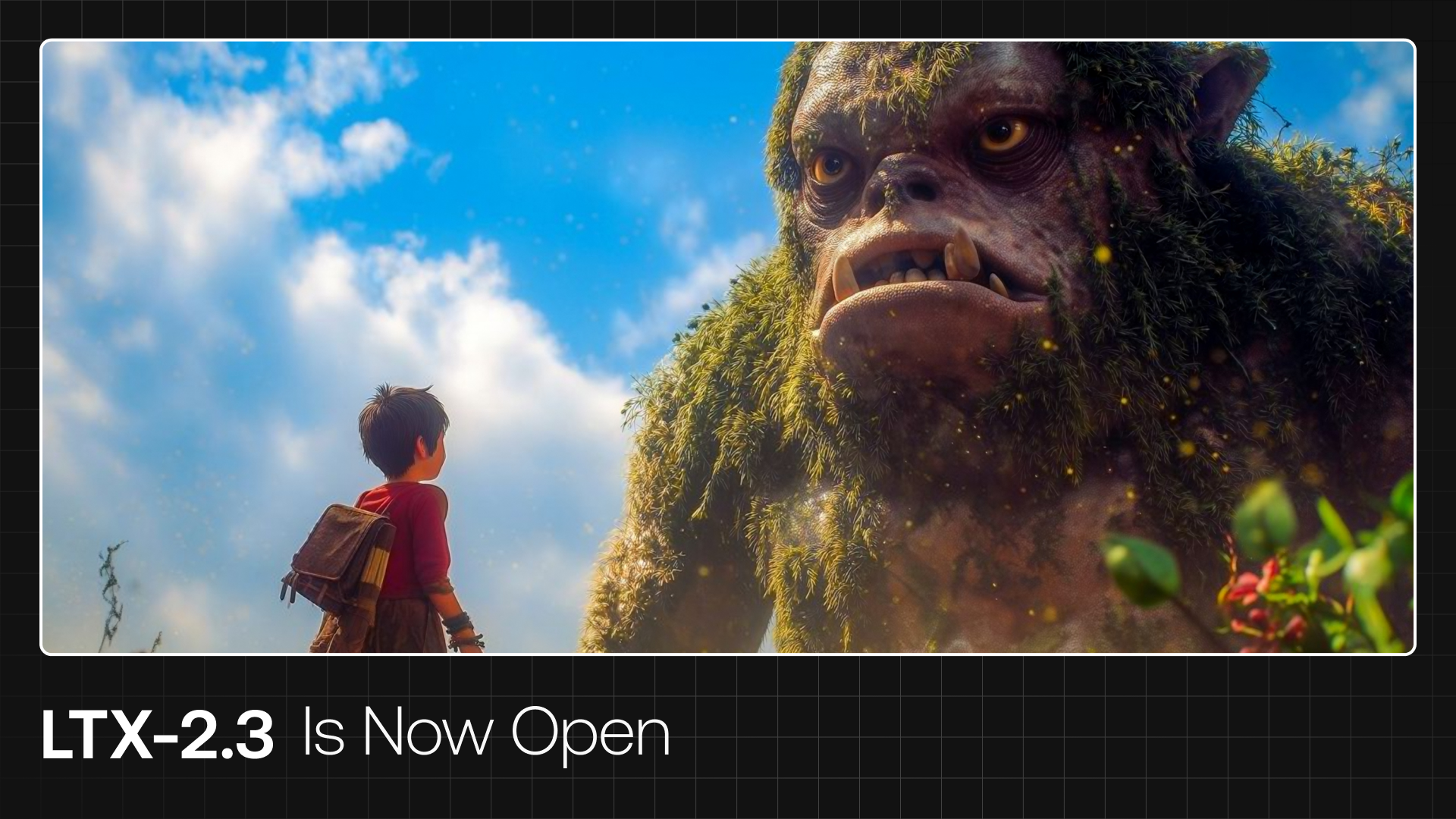

Update: Lightricks has released LTX-2.3, an upgraded version with improved audio visual quality, faster 8-step distillation, and new spatial and temporal upscalers.

What LTX-2 Actually Does

LTX-2 generates video and audio simultaneously in a single unified process. You provide text prompts, optional image references, depth maps, or short video snippets. The model outputs synchronized video with matching audio where motion, dialogue, ambient sound, and music align naturally without separate stitching or manual syncing.

The synchronized generation solves the awkward problem every AI video creator faces: separately generated audio never quite matches the visual timing. Footsteps land off beat. Music swells arrive frames too late. Ambient sound feels pasted on. LTX-2 generates both streams together, so when a character moves, the audio responds with correct spatial positioning and timing.

The technical implementation uses a latent diffusion backbone combined with transformer based temporal attention. The diffusion component handles spatial detail by denoising from a compact latent space rather than raw pixels, keeping 4K feasible on consumer GPUs. The transformer layers track time, treating frames as a connected sequence rather than isolated images. This architecture explains why LTX-2 maintains temporal consistency where other models flicker or jitter.
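To get a feel for why denoising in a latent space keeps 4K tractable, the sketch below counts the values in a raw 10 second 4K clip and in a compressed latent of the same clip. The compression factors (32x spatial, 8x temporal) and the 128 latent channels are illustrative assumptions, not published LTX-2 figures; only the resolution, frame rate, and clip length come from the article.

```python
import math

def pixel_values(width, height, fps, seconds, channels=3):
    """Scalar values in a raw RGB clip."""
    frames = fps * seconds
    return width * height * channels * frames

def latent_values(width, height, fps, seconds,
                  spatial=32, temporal=8, latent_channels=128):
    """Scalar values after compression.

    spatial, temporal and latent_channels are hypothetical factors
    chosen for illustration only.
    """
    frames = fps * seconds
    return (math.ceil(width / spatial)
            * math.ceil(height / spatial)
            * math.ceil(frames / temporal)
            * latent_channels)

pixels = pixel_values(3840, 2160, 50, 10)
latents = latent_values(3840, 2160, 50, 10)
print(f"pixel-space values:  {pixels:,}")
print(f"latent-space values: {latents:,}")
print(f"reduction: about {pixels / latents:.0f}x")
```

Whatever the real factors are, the point is the same: the denoiser operates on a tensor that is orders of magnitude smaller than the decoded video.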

The model accepts multimodal inputs beyond text: image references for style guidance, depth maps for spatial control, audio cues for soundtrack direction, and reference video for motion patterns. This flexibility enables precise creative direction rather than hoping random generations eventually produce usable results.

Try LTX-2 image-to-video animation to transform static images into dynamic footage with synchronized audio.

The Open Source Distinction

"Open source" in AI often means different things. Some companies release inference code but keep model weights proprietary. Others share weights but withhold training methodology. LTX-2 provides the complete package: model architecture, pre trained weights, training code, datasets, and inference tooling.

The January 6, 2026 release includes everything needed to run, modify, fine tune, or completely retrain the model. Researchers can examine training approaches. Developers can customize for specific use cases. Studios can fine tune on proprietary visual styles. The transparency extends to training data sources, all licensed from Getty Images and Shutterstock, eliminating copyright concerns that plague other AI video models.

Licensing follows a clear structure: free for academic research and free for commercial use by companies under $10 million annual recurring revenue. Organizations above that threshold require a commercial license, which grants continued access to the same open weight model with enterprise support options. This tiered approach lets indie creators and startups use production grade AI video without upfront costs while ensuring Lightricks gets compensated by larger enterprises.

The distinction matters for production planning. Proprietary models create vendor lock in where your creative work depends on continued API access and acceptable pricing. Open weights mean you can run inference indefinitely on your own hardware regardless of company decisions or pricing changes. Training code access means you can adapt the model as your needs evolve rather than hoping the vendor adds features you require.

Hardware Requirements and Performance

LTX-2 runs on consumer NVIDIA GPUs with a minimum of 12GB VRAM for 720p to 1080p drafts at 24-30fps using fp16 precision and efficient attention. With 16-24GB VRAM, 1080p to 1440p becomes practical and 4K is possible with tiling techniques. A 24GB card like the RTX 4090 comfortably handles native 4K clips of 10 seconds at up to 50fps.
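The tiers above can be turned into a quick lookup. This is a minimal sketch that only encodes the figures quoted in this article; the function name is made up for illustration, and a 24GB card is placed in the top tier.

```python
def suggested_tier(vram_gb: float) -> str:
    """Map available VRAM to the output tier described in the article."""
    if vram_gb >= 24:
        return "native 4K, 10 second clips, up to 50fps"
    if vram_gb >= 16:
        return "1080p to 1440p, 4K possible with tiling"
    if vram_gb >= 12:
        return "720p to 1080p drafts at 24-30fps (fp16)"
    return "below the stated 12GB minimum"

for vram in (10, 12, 16, 24):
    print(f"{vram}GB -> {suggested_tier(vram)}")
```

If PyTorch is installed, `torch.cuda.get_device_properties(0).total_memory / 1024**3` gives the VRAM figure to pass in.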

Actual generation times on an RTX 4090: a 10 second 4K clip with 30-36 diffusion steps completes in 9-12 minutes. The same clip on an RTX 3090 takes roughly 20-25 minutes. For rapid iteration, 1080p drafts render in 2-4 minutes on the 4090. These speeds make AI video practical for iterative creative work rather than overnight batch processing.

Audio generation adds approximately 10-15% to overall render time, negligible compared to the visual pass. The synchronized approach proves more efficient than generating video first then attempting to match audio separately.
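For scheduling, it helps to turn these figures into a render-to-footage ratio and an audio-inclusive estimate. The sketch below assumes the quoted minutes describe the visual pass alone and that the 10-15% audio overhead is added on top; the article does not say which way the numbers were measured, so treat the second function as a rough upper bound.

```python
def realtime_factor(render_minutes: float, clip_seconds: float = 10) -> float:
    """Seconds of compute needed per second of finished footage."""
    return render_minutes * 60 / clip_seconds

def with_audio(visual_minutes: float, overhead=(0.10, 0.15)):
    """Low and high estimates after adding the audio pass.

    Assumes the overhead applies on top of the visual-only time.
    """
    low, high = overhead
    return visual_minutes * (1 + low), visual_minutes * (1 + high)

for label, minutes in (("RTX 4090, 4K, best case", 9),
                       ("RTX 4090, 4K, worst case", 12),
                       ("RTX 3090, 4K, worst case", 25)):
    lo, hi = with_audio(minutes)
    print(f"{label}: {realtime_factor(minutes):.0f}x realtime, "
          f"{lo:.1f}-{hi:.1f} min with audio")
```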

NVIDIA ecosystem optimization extends from consumer GeForce RTX GPUs through professional workstations to enterprise data center deployment. Quantization to NVFP8 reduces model size by approximately 30% and improves performance by up to 2x. ComfyUI integration provides further optimization, enabling an efficient workflow for users already familiar with that ecosystem.

For detailed step-by-step instructions on setting up LTX-2 with ComfyUI on your RTX GPU, including installation commands, workflow configuration, and performance optimization, see our comprehensive guide to running LTX-2 in 4K.

The on device capability matters beyond performance. Running locally ensures complete privacy for unreleased intellectual property. Studios can iterate on confidential projects without uploading to cloud services. Enterprises maintain regulatory compliance by keeping all data processing internal. Creators work without bandwidth limitations or usage caps.

Creative Control and Customization

LTX-2 provides frame level control through multi keyframe conditioning, 3D camera logic, and LoRA fine tuning. Multi keyframe conditioning lets you specify visual state at multiple points in the sequence rather than only start and end frames. The model interpolates motion between keyframes while maintaining coherence, enabling precise choreography for complex sequences.

3D camera logic understands spatial relationships and motion through virtual space. When you specify camera movements like dolly in, pan left, or crane up, the model generates appropriate perspective changes, depth cues, and motion blur. This matches cinematography language filmmakers already use rather than hoping AI interprets abstract descriptions correctly.

LoRA (Low Rank Adaptation) fine tuning enables efficient customization on specific visual styles or subject matter. Full fine tuning requires substantial compute and data. LoRA achieves similar results with a fraction of the resources by training small adapter layers rather than the entire model. Studios can fine tune on their visual identity, create custom control models for specific effects, or adapt the base model for particular genres.
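The resource saving comes from simple arithmetic. LoRA freezes a weight matrix of shape d_out x d_in and trains two small matrices of shapes d_out x r and r x d_in instead, so the trainable parameter count drops from d_out * d_in to r * (d_in + d_out). The sketch below works this out for one layer; the 4096 x 4096 layer size and rank 16 are illustrative values, not LTX-2's actual dimensions.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when fine tuning the whole weight matrix."""
    return d_in * d_out

def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for a rank-r adapter (matrices B and A)."""
    return rank * (d_in + d_out)

# Hypothetical layer size and rank, chosen only to show the ratio.
d_in, d_out, rank = 4096, 4096, 16
full = full_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)
print(f"full fine tune: {full:,} parameters")
print(f"LoRA rank {rank}:   {lora:,} parameters")
print(f"LoRA trains {lora / full:.2%} of the layer")
```

Raising the rank gives the adapter more capacity at a linear cost in parameters, which is why rank is the main knob when a style or control LoRA underfits.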

The Lightricks team provides a separate repository specifically for fine tuning: LTX-Video-Trainer supports both 2B and 13B model variants, enables full fine tuning and LoRA approaches, and includes workflows for control LoRAs (depth, pose, canny) and effect LoRAs (specialized transformations). The comprehensive documentation lowers barriers to customization.

Production Workflow Integration

LTX-2 API integration demonstration showing workflow possibilities. Source: Lightricks

LTX-2 integrates into existing production pipelines through multiple pathways. The self serve API provides tiered access: Fast mode starts at $0.04 per second for rapid iteration and previews, Pro mode at $0.08 per second balances efficiency and quality for daily production work, and Ultra mode at $0.16 per second delivers maximum 4K fidelity at 50fps for final deliverables.
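A quick budget check makes the tiers easier to compare. The sketch below assumes the rates are charged per second of generated video and uses the starting prices quoted above; the function and dictionary names are made up for illustration and are not part of the LTX-2 API.

```python
from decimal import Decimal

# Starting rates in USD per second, as quoted in this article.
RATES = {
    "fast": Decimal("0.04"),
    "pro": Decimal("0.08"),
    "ultra": Decimal("0.16"),
}

def api_cost(mode: str, clip_seconds: int, takes: int = 1) -> Decimal:
    """Estimated spend for a number of takes of one clip length."""
    return RATES[mode] * clip_seconds * takes

# Example: iterate 20 times in Fast mode, then render 2 finals in Ultra.
drafts = api_cost("fast", 10, takes=20)
finals = api_cost("ultra", 10, takes=2)
print(f"drafts: ${drafts}, finals: ${finals}, total: ${drafts + finals}")
```

Using `Decimal` avoids floating point rounding surprises when the totals feed into an invoice or budget sheet.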

Local deployment runs entirely on your infrastructure without cloud dependencies. The GitHub repository includes complete inference code, example workflows, and integration guides. Developers can build custom pipelines incorporating LTX-2 alongside other production tools without external API calls or usage tracking.

ComfyUI integration provides a node based visual workflow for creators who prefer a graphical interface over code. The implementation includes optimized performance improvements and supports specialized control models. Users already comfortable with ComfyUI for Stable Diffusion workflows can add LTX-2 video generation with a minimal learning curve.

The flexibility matters for different production scales. Solo creators might use ComfyUI on a local GPU for client projects. Small studios might deploy on private servers for team access. Enterprises might integrate via API for scalable infrastructure. The same model serves all scenarios without forcing a specific deployment approach.

What the Model Handles Well

Motion consistency across frames represents LTX-2's clearest strength. Camera moves maintain perspective accuracy. Moving subjects don't morph or flicker. Background elements stay stable rather than flowing like liquid. When prompts specify "slow dolly in" or "handheld documentary style," the generated motion matches cinematography conventions.

Texture detail at 4K resolution exceeds expectations for AI generated content. Fabric shows weave patterns. Skin displays pore detail. Foliage renders individual leaves rather than smeared vegetation. The latent diffusion approach preserves fine detail that pixel space generation often loses at high resolutions.

Lighting coherence helps scenes feel physically plausible. Shadows fall correctly based on light source positions. Reflections appear in appropriate surfaces. Depth of field creates believable bokeh in out of focus areas. When specific lighting like "film noir" or "golden hour" appears in prompts, the model applies consistent lighting logic rather than random bright and dark patches.

Synchronized audio timing aligns with on screen action naturally. Footsteps land when feet hit ground. Door slams occur when doors close. Music accents match visual beats. The unified generation process creates temporal alignment that separate audio generation struggles to match through post processing.

Current Limitations and Practical Considerations

Fine text rendering still presents challenges. Small typography shimmers or becomes illegible across frames. If your production requires readable signage or text overlays, generate those separately and composite them in post. The limitation affects most current AI video models and is not specific to LTX-2.

Character consistency across shots requires careful prompting or reference images. Without guidance, the same character may appear with different features in subsequent generations. For projects requiring recognizable characters across multiple shots, use image references or fine tune on specific character appearances.

Complex physics beyond basic motion patterns exceed current capability. Detailed liquid simulation, realistic cloth draping under complex forces, or intricate mechanical interactions may not generate accurately. The model handles believable approximations of these phenomena but not simulation grade physics.

Audio quality works well for ambient sound, music beds, and simple dialogue but doesn't replace professional recording for critical voice work. Use generated audio as a temporary track for timing, then replace it with properly recorded dialogue or licensed music for final delivery.

The 10 second maximum clip length requires planning for longer sequences. Generate shots separately then edit them together, or use video extension techniques if you need continuity across a longer duration. The limitation reflects current training approaches rather than a fundamental technical ceiling.
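Planning around the cap is mostly a matter of splitting a sequence into equal segments that each fit. A minimal sketch, assuming you want the fewest clips possible and evenly sized segments; the function name is illustrative.

```python
import math

def plan_clips(total_seconds: float, max_clip: float = 10.0):
    """Split a sequence into the fewest equal segments of at most max_clip.

    Returns a list of (start, end) times in seconds.
    """
    if total_seconds <= 0:
        return []
    count = math.ceil(total_seconds / max_clip)
    length = total_seconds / count
    return [(i * length, (i + 1) * length) for i in range(count)]

for start, end in plan_clips(25):
    print(f"generate shot covering {start:.2f}s to {end:.2f}s")
```

Equal segments avoid ending on a very short final clip, which tends to be harder to cut around than three clips of similar length.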

Commercial Use and Licensing Clarity

The licensing structure removes ambiguity that plagues many AI tools. Academic research: completely free, no restrictions. Commercial use under $10 million annual recurring revenue: completely free, no licensing fees. Commercial use over $10 million ARR: commercial license required with negotiated terms.

This clarity matters for production planning. Independent filmmakers know exactly where they stand. Small production companies can use the model for client work without legal uncertainty. Growing companies understand at what point they'll need licensing arrangements.

The threshold at $10 million ARR provides substantial room before licensing becomes a concern. Because the threshold applies to annual revenue rather than cumulative earnings, a boutique studio billing $500,000 a year sits at 5% of the limit and would need to grow twentyfold before a license is required. Most independent creators never reach that scale.
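The tiers reduce to a simple check on annual recurring revenue. The sketch below encodes only what this article states; it treats revenue of exactly $10 million as requiring a license, which is a conservative assumption because the article only describes "under" and "over". The actual license text is the authority.

```python
ARR_THRESHOLD_USD = 10_000_000

def license_tier(arr_usd: float, academic: bool = False) -> str:
    """Classify a user under the tiers described in the article."""
    if academic:
        return "free: academic research"
    if arr_usd < ARR_THRESHOLD_USD:
        return "free: commercial use under threshold"
    # Exactly at the threshold is treated as licensed (assumption).
    return "commercial license required"

def growth_headroom(arr_usd: float) -> float:
    """How many times current annual revenue fits under the threshold."""
    return ARR_THRESHOLD_USD / arr_usd

print(license_tier(500_000))
print(f"headroom: {growth_headroom(500_000):.0f}x current revenue")
```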

For enterprises requiring licenses, Lightricks offers flexibility: continued use of the open weight model (not forced API migration), enterprise grade support options, deployment customization assistance, and model adaptation consultation. The licensing requirement funds continued development while keeping the model accessible to smaller operations.

Training data licensing through Getty Images and Shutterstock eliminates copyright concerns. Unlike models trained on scraped internet content with questionable legal status, LTX-2 uses fully licensed training data. This matters for commercial work where clients demand clear licensing chains for all production elements.

Getting Started Resources

Model weights and code:

- Primary repository: https://github.com/Lightricks/LTX-2

- Model weights: https://huggingface.co/Lightricks/LTX-2

- Training repository: https://github.com/Lightricks/LTX-Video-Trainer

API and platform access:

- Official API: https://ltx.io/model

- LTX Studio platform: https://ltx.io

Documentation and support:

- Technical documentation in GitHub repository README

- Example workflows and prompts in repository examples directory

- Community discussions in GitHub issues and discussions

- Official Lightricks Discord for community support

Hardware recommendations:

- Minimum: 12GB VRAM (RTX 3060 12GB; the 10GB RTX 3080 falls below this and needs reduced settings)

- Recommended: 16-24GB VRAM (RTX 3090, RTX 4080, RTX 4090)

- Optimal: 24GB+ VRAM (RTX 4090, professional cards)

- CPU: Modern multi core processor

- RAM: 32GB system memory recommended

- Storage: 100GB+ for model weights and generated content

Installation and setup tutorial:

- Complete guide to running LTX-2 in 4K on RTX GPUs: Step-by-step ComfyUI installation, model configuration, workflow setup, and troubleshooting tips following CES 2026 updates

Why This Matters for Filmmakers

The gap between professional production capabilities and independent creator budgets just narrowed dramatically. Shots requiring expensive camera rigs, inaccessible locations, or complex VFX become achievable through careful prompting and iteration. The democratization doesn't eliminate cinematography skill. It removes budget as the primary barrier to executing a vision.

Consider production scenarios newly viable:

- Establishing shots of difficult locations without travel costs

- Complex camera movements without equipment rental

- Visual effects shots without outsourcing to VFX houses

- Rapid prototyping of sequences before committing to expensive shoots

- Generating B roll when original footage proves insufficient

- Creating entirely AI native short form content for social platforms

The creative opportunity extends beyond cost reduction. AI video enables experimentation impossible through traditional production. Generate 20 variations of a shot with different lighting, try unconventional camera angles without risking expensive equipment, or explore visual styles impractical for physical production.

The open source nature protects creative investment. Work you create today remains accessible regardless of company decisions or market conditions. Models you customize become assets you control. The production pipeline you build around LTX-2 stays functional whether Lightricks continues development or not.

Integration with Traditional Production

LTX-2 complements rather than replaces traditional filmmaking. The model excels at generating elements difficult or expensive through practical production: impossible camera moves, fantastical environments, rapid visualization of concepts. Traditional shooting still handles elements where physical presence matters: human performances, practical stunts, unique real world locations.

Smart integration uses each approach where it provides an advantage. Shoot principal photography with actors and practical sets. Generate establishing shots, impossible angles, and VFX elements. Combine both in editing to create a final sequence that maximizes quality while minimizing budget.

The tool enables different production planning. Rather than eliminating shots from scripts due to budget constraints, generate them through AI and allocate shooting budget to elements requiring physical production. The flexibility expands creative options rather than forcing a choice between vision and budget reality.

For post production workflows, LTX-2 provides capabilities previously requiring specialized software: extend shots beyond filmed duration, create transitional elements between cuts, generate fill footage for pacing adjustments. The generation speed makes these additions practical during editing rather than requiring a separate VFX phase.

The Broader Implications

LTX-2 represents the first truly open source model competitive with proprietary offerings. Previous open source AI video models lagged significantly in quality, required enterprise hardware, or lacked essential features like audio generation. This release eliminates those compromises.

The precedent matters beyond this specific model. If open source AI video can match closed development quality, the same pattern should apply to other AI domains. The community gains proof that cooperative development plus transparency can deliver professional results without sacrificing capability.

For the AI research community, full access to training code and methodology accelerates innovation. Researchers can build on Lightricks' work rather than re implementing from papers. Academic labs with limited budgets can fine tune state of the art models rather than training from scratch. The collaborative approach speeds overall progress.

For creative software vendors, LTX-2 establishes a new baseline for AI video capability. Proprietary models must now justify their closed nature through superior results, unique features, or substantially better user experience. Simple access to adequate AI video no longer commands premium pricing when an open source alternative exists.

The business model validation proves important. Lightricks makes LTX-2 freely available to the majority of users while monetizing enterprise access, API usage, and integrated platforms. This approach funds continued development without restricting creator access. If successful, other companies may adopt similar strategies, expanding open source AI while maintaining commercial viability.

What to Expect Next

Lightricks has committed to continued development through the "Open Creativity Stack" initiative. Future releases will integrate additional models and capabilities, expand fine tuning options and control mechanisms, improve performance and reduce hardware requirements, and extend generation length and quality.

The open source community will likely contribute significantly: custom fine tunes for specific visual styles, integration with additional creative tools, optimization for different hardware configurations, and novel applications beyond video generation.

Real time generation may become a realistic goal within 12-18 months. Current inference still takes minutes per clip on high end hardware, but distillation and quantization keep narrowing the gap to interactive latency. Continued optimization could enable live direction of AI generation, opening entirely new creative workflows where filmmakers direct AI output in real time rather than waiting for batch processing.

Longer form generation through improved architectures or chaining approaches will address the 10 second limitation. Techniques like video extension, seamless stitching, or fundamentally longer context windows could enable minute plus continuous generations while maintaining consistency.

Quality improvements through continued training, architecture refinements, or hybrid approaches combining multiple models will address current limitations. Fine text rendering, complex physics, and character consistency all represent active research areas with clear paths toward improvement.

Practical Advice for Getting Started

Start with clear, achievable goals rather than attempting feature film production immediately. Generate short establishing shots for existing projects. Create social media content where the 10 second maximum aligns naturally with platform requirements. Experiment with B roll generation to supplement traditional footage.

You can start with LTX-2 text-to-video for prompt-based generation or try image-to-video to animate existing storyboard frames or concept art.

Invest time learning effective prompting. Specific cinematography language produces better results than vague descriptions. "Slow push in, shallow depth of field, golden hour lighting" generates more useful output than "nice looking shot." Study the example prompts in repository documentation and community discussions to understand what works.

Build a prompt library documenting successful generations. When you create something useful, save the exact prompt, settings, and any reference images. This knowledge base accelerates future work by providing starting points rather than beginning from scratch with each generation.
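A prompt library can be as simple as a JSON Lines file with one record per successful generation. The sketch below uses only the Python standard library; the field names and the keys inside `settings` (such as `steps` and `resolution`) are illustrative placeholders, not LTX-2 parameter names.

```python
import json
from dataclasses import dataclass, asdict, field
from pathlib import Path

@dataclass
class PromptRecord:
    prompt: str
    settings: dict = field(default_factory=dict)          # placeholder keys
    reference_images: list = field(default_factory=list)  # file paths
    notes: str = ""

def save_record(record: PromptRecord, library_path) -> None:
    """Append one record to the library as a single JSON line."""
    with Path(library_path).open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(asdict(record)) + "\n")

def load_library(library_path) -> list:
    """Read every record back; a missing file means an empty library."""
    path = Path(library_path)
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as handle:
        return [PromptRecord(**json.loads(line))
                for line in handle if line.strip()]

def search(records, keyword: str) -> list:
    """Case-insensitive keyword match against the prompt text."""
    return [r for r in records if keyword.lower() in r.prompt.lower()]
```

Usage is a matter of calling `save_record(PromptRecord(prompt="Slow push in, shallow depth of field, golden hour lighting", settings={"steps": 30, "resolution": "1080p"}), "prompts.jsonl")` after a good take, then `search(load_library("prompts.jsonl"), "golden hour")` when starting a similar shot.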

Combine AI generated content thoughtfully with traditional footage. The blend works best when each approach serves its strengths. Don't force AI generation where practical shooting would be simpler or better. Don't shoot expensively what AI could generate adequately.

Participate in the community. GitHub discussions, Discord channels, and creative forums provide faster learning than solo experimentation. Share your successes and failures, because both contribute to collective knowledge that benefits everyone using the tool.

The Opportunity for Independent Creators

For the first time, independent filmmakers have access to production grade AI video generation without API costs, usage restrictions, or dependency on proprietary platforms. The capability runs on consumer hardware many creators already own. The licensing permits commercial work without fees. The source code enables customization without vendor approval.

This combination removes barriers that previously limited AI video to well funded studios or experimental personal projects. Productions can integrate AI generation as a standard production technique rather than an expensive novelty requiring special budget allocation.

The timing coincides with broader acceptance of AI tools in creative work. Audiences increasingly care about results rather than production methods. The stigma previously attached to AI generated content diminishes as quality improves and integration becomes sophisticated. LTX-2's quality enables seamless blending with traditional footage where AI contribution becomes invisible.

For creators building businesses, the licensing clarity and open weights provide a stable foundation. Client work doesn't risk future access issues. Business growth doesn't trigger unexpected licensing costs until substantial scale. Infrastructure investment in workflows and customization pays long term dividends rather than becoming stranded assets if vendors change policies.

The opportunity extends beyond cost savings to capability expansion. Creators can attempt projects previously impossible at their budget level. The risk reward calculation for experimental work changes when production costs drop dramatically. More attempts mean more successes, accelerating creative development and portfolio building.

Start experimenting now with LTX-2 in AI FILMS Studio using text-to-video generation or image-to-video animation while the technology continues its rapid advancement. LTX-2 represents the current state of the art, but the pace of improvement suggests even more capable open source models within 12-18 months. Early adopters develop expertise that compounds as tools improve.